A web crawler, also called a spider or bot, is a program or script that navigates the World Wide Web and fetches page content. Its primary purpose is to systematically browse and index web pages so that search engines can return faster and more accurate results. Crawlers are used in many applications. Search engines use them to gather page information and build large index databases. Businesses use them to scrape data from websites for market and competitor analysis, and to monitor changes in web content such as price fluctuations and news updates. Researchers also use crawlers to collect information in specific fields.

How Do Web Crawlers Work?

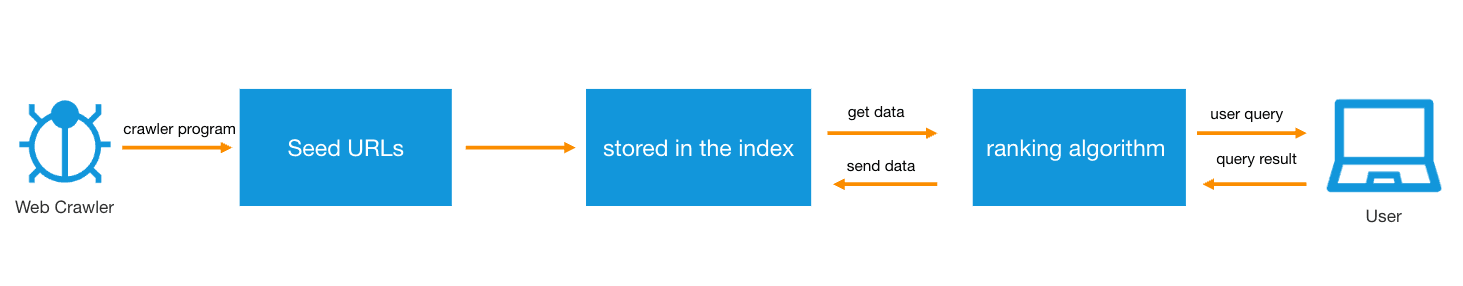

Web crawlers typically start from a set of known URLs called seed URLs. The crawler first visits these pages, downloads their content, and parses the HTML to extract useful information such as text, images, and videos.
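As a minimal sketch of the download-and-parse step, the code below uses Python's standard-library `html.parser` to pull the title, visible text, and image sources out of a single HTML page. The names `PageParser` and `parse_page` are hypothetical, chosen for illustration; production crawlers usually rely on more robust parsers and also handle character encodings, malformed markup, and media such as video.

```python
from html.parser import HTMLParser


class PageParser(HTMLParser):
    """Collects the <title>, visible text, and <img> sources of one HTML page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.text_parts = []
        self.images = []
        self._in_title = False
        self._skip_depth = 0  # greater than 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "img":
            src = dict(attrs).get("src")
            if src:
                self.images.append(src)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
        elif tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._in_title:
            self.title += data
        elif self._skip_depth == 0 and data.strip():
            # Keep only non-empty text that is not script or style source.
            self.text_parts.append(data.strip())


def parse_page(html):
    """Return the title, visible text, and image URLs found in `html`."""
    parser = PageParser()
    parser.feed(html)
    parser.close()
    return {
        "title": parser.title.strip(),
        "text": " ".join(parser.text_parts),
        "images": parser.images,
    }
```

For example, `parse_page('<title>Demo</title><p>Hello <b>world</b></p><img src="/a.png">')` returns `{'title': 'Demo', 'text': 'Hello world', 'images': ['/a.png']}`.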

At the same time, the crawler identifies and extracts every hyperlink on the page and adds the new links to its queue of pages to crawl. This lets the crawler expand from the initial seed URLs to more and more of the web, which can mean billions of pages. Crawlers discover pages by following links: if page A links to page B in its content, the bot can move from page A to page B and then process page B. Within a single site, internal links determine which pages a crawler can reach. This is why internal linking is so important for SEO: it helps search engine crawlers find and index all the pages on your website.
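The seed-and-queue process above amounts to a breadth-first traversal of the link graph. The sketch below is one minimal way to express it in Python, assuming a caller-supplied `fetch(url)` function (hypothetical) that returns a page's HTML or `None`. It deliberately ignores robots.txt, politeness delays, and error handling, all of which a real crawler needs.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the href of every <a> tag, resolved against the page URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links and drop #fragments so one page maps to one URL.
                absolute, _ = urldefrag(urljoin(self.base_url, href))
                if urlparse(absolute).scheme in ("http", "https"):
                    self.links.append(absolute)


def crawl(seed_urls, fetch, max_pages=100):
    """Breadth-first crawl from the seed URLs; returns the URLs visited, in order."""
    frontier = deque(seed_urls)  # queue of pages waiting to be crawled
    seen = set(seed_urls)        # every URL ever queued, to avoid revisits
    visited = []
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:
            continue
        visited.append(url)
        extractor = LinkExtractor(url)
        extractor.feed(html)
        for link in extractor.links:
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return visited
```

With an in-memory "site" such as `{"https://example.com/": '<a href="/a">A</a> <a href="/b#top">B</a>', "https://example.com/a": '<a href="/b">B</a>', "https://example.com/b": '<a href="/">home</a>'}`, calling `crawl(["https://example.com/"], site.get)` visits `/`, `/a`, and `/b` once each. A FIFO queue gives breadth-first order; swapping it for a priority queue is how crawlers apply the prioritization discussed next.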

Before crawling pages on a site, the crawler checks the site's robots.txt file to learn which pages the website administrator allows to be crawled and which are off limits. Crawlers also do not crawl every page blindly. They set crawling priorities based on metrics such as how many other pages link to a page, its traffic volume, and the likely importance of its content. This focuses limited crawling resources on the most valuable pages first.
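Python's standard library includes `urllib.robotparser` for the robots.txt check. This sketch parses an example robots.txt held in a string; the rules and the `ExampleBot` user agent are made up for illustration. Against a live site you would call `set_url()` and `read()` instead of `parse()`.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt content (hypothetical rules for illustration).
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def load_rules(robots_txt):
    """Build a RobotFileParser from robots.txt text already in memory."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


rules = load_rules(ROBOTS_TXT)
USER_AGENT = "ExampleBot"  # hypothetical crawler name

for url in ("https://example.com/blog/post.html", "https://example.com/private/report.html"):
    allowed = rules.can_fetch(USER_AGENT, url)
    print(url, "allowed" if allowed else "disallowed")

# Crawl-delay (in seconds) requested for this user agent, or None if unset.
print("crawl delay:", rules.crawl_delay(USER_AGENT))
```

Here the blog post is allowed, the `/private/` page is disallowed, and the requested crawl delay is 2 seconds.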

Because content on the Internet changes constantly, crawlers periodically revisit pages they have already crawled to keep the index up to date. The fetched data is stored, processed, and indexed so that the search engine can retrieve it quickly.
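One simple way to decide how often to revisit a page is an adaptive interval: fingerprint the content on each visit, come back sooner when it has changed, and back off when it has not. The halving/doubling policy and the one-hour and 30-day bounds below are illustrative assumptions, not any search engine's actual schedule.

```python
import hashlib
from dataclasses import dataclass

MIN_INTERVAL = 3600         # 1 hour (assumed lower bound)
MAX_INTERVAL = 30 * 86400   # 30 days (assumed upper bound)


@dataclass
class PageState:
    content_hash: str  # fingerprint of the content seen on the last visit
    interval: float    # seconds to wait before the next visit
    next_visit: float  # timestamp of the next scheduled visit


def fingerprint(content: str) -> str:
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


def update_schedule(state: PageState, content: str, now: float) -> PageState:
    """Return the page's new state after revisiting it at time `now`."""
    new_hash = fingerprint(content)
    if new_hash != state.content_hash:
        # Content changed: revisit sooner, but not more often than MIN_INTERVAL.
        interval = max(MIN_INTERVAL, state.interval / 2)
    else:
        # Content unchanged: back off, up to MAX_INTERVAL.
        interval = min(MAX_INTERVAL, state.interval * 2)
    return PageState(new_hash, interval, now + interval)
```

A page that keeps changing converges toward hourly visits, while a static page drifts toward monthly ones, which is the kind of trade-off an incremental crawler makes to keep its index fresh without wasting requests.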

Different search engines may customize the behavior of their crawlers based on their needs and objectives. For example, some search engines may prioritize crawling sites with high user traffic or strong brand influence.
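Prioritization, whether by inbound links, traffic, or brand signals, can be modeled as a priority queue over the crawl frontier. In the sketch below, `PriorityFrontier` is built on Python's `heapq`, and `priority_score` is a hypothetical formula whose weights are arbitrary assumptions; real search engines do not publish their scoring.

```python
import heapq
import itertools


class PriorityFrontier:
    """Crawl frontier that always yields the highest-scoring URL next."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker: equal scores pop in insertion order

    def push(self, url, score):
        # heapq is a min-heap, so store the negated score to pop the largest first.
        heapq.heappush(self._heap, (-score, next(self._counter), url))

    def pop(self):
        _, _, url = heapq.heappop(self._heap)
        return url

    def __len__(self):
        return len(self._heap)


def priority_score(inbound_links, monthly_visits, link_weight=1.0, traffic_weight=0.001):
    """Hypothetical score combining link popularity and traffic (illustrative weights)."""
    return link_weight * inbound_links + traffic_weight * monthly_visits
```

Pushing a heavily linked, high-traffic page alongside a new page with few links means the popular page is popped, and therefore crawled, first. This sketch does not handle re-prioritizing a URL that is already queued; production frontiers usually do.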

Difference Between Crawling and Scraping

Web scraping (also known as data scraping or content scraping) typically refers to downloading website content without the website's permission. This may serve malicious purposes, such as illegally copying or republishing the scraped content. Compared with crawling, scraping is usually more targeted, often limited to specific pages or specific sites.

Web crawling, by contrast, systematically visits web pages by following links from one page to the next. Legitimate crawlers, such as those run by search engines, take server load into account: they follow the rules in robots.txt and limit their request frequency so they do not overload the sites they visit.
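Limiting request frequency is often done per host: before each request, the crawler waits until a minimum delay has passed since its last request to the same host. The `PolitenessLimiter` class below is a hypothetical sketch of that idea; the clock and sleep functions are injectable so the behavior can be tested without real waiting. A crawler could feed in the `Crawl-delay` value from robots.txt as `min_delay`.

```python
import time
from urllib.parse import urlparse


class PolitenessLimiter:
    """Enforces a minimum delay between consecutive requests to the same host."""

    def __init__(self, min_delay=1.0, clock=time.monotonic, sleep=time.sleep):
        self.min_delay = min_delay
        self.clock = clock
        self.sleep = sleep
        self._last_request = {}  # host -> time of the most recent request

    def wait(self, url):
        """Block until it is polite to request `url`, then record the request time."""
        host = urlparse(url).netloc
        last = self._last_request.get(host)
        if last is not None:
            remaining = self.min_delay - (self.clock() - last)
            if remaining > 0:
                self.sleep(remaining)
        self._last_request[host] = self.clock()
```

Calling `limiter.wait(url)` before each fetch spaces out requests to one site while leaving requests to other hosts unaffected.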

Scraping tools extract data from individual web pages or entire websites. They have legitimate uses such as data analysis and market research, but malicious actors also use them to steal and republish website content, potentially infringing copyrights and taking search rankings and traffic away from the original site.

There are four basic types of web crawlers:

- Focused crawlers search and download web content related to a specific topic and index it. These crawlers only focus on links they consider relevant, rather than browsing every hyperlink on a webpage like standard web crawlers.

- Incremental crawlers revisit sites to refresh indexes and update URLs.

- Parallel crawlers run multiple crawling processes simultaneously to maximize download rates.

- Distributed crawlers spread the work across multiple crawlers, often on separate machines, each crawling a different set of websites at the same time.
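To illustrate the parallel approach, the sketch below downloads many pages at once with a thread pool from Python's `concurrent.futures`. It uses threads rather than separate processes, a common choice in Python because fetching pages is I/O-bound; distributing work across machines is not shown. The `http_fetch` helper assumes UTF-8 pages for simplicity, and `fetch_all` is a hypothetical name.

```python
import urllib.request
from concurrent.futures import ThreadPoolExecutor


def http_fetch(url, timeout=10):
    """Download one page and decode it as text (UTF-8 assumed for simplicity)."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def fetch_all(urls, fetch=http_fetch, max_workers=8):
    """Fetch many URLs concurrently using a pool of worker threads.

    Returns {url: page text, or the exception raised while fetching it}.
    """
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(fetch, url): url for url in urls}
        for future, url in futures.items():
            try:
                results[url] = future.result()
            except Exception as exc:  # one failed page should not stop the batch
                results[url] = exc
    return results
```

In practice this would be combined with the per-host politeness delay described earlier, so that parallelism increases total throughput without hammering any single site.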

Common web crawlers include:

- Googlebot, Google's crawler (actually two crawlers, Googlebot Desktop and Googlebot Smartphone, used for desktop and mobile search respectively)

- Bingbot, the crawler for Microsoft's search engine

- Amazonbot, Amazon's web crawler

- DuckDuckBot, the crawler for the search engine DuckDuckGo

- YandexBot, the crawler for the Yandex search engine

- Baiduspider, the web crawler for the Chinese search engine Baidu

- Slurp, Yahoo's web crawler

- Bots used by coupon applications, such as Honey
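Most of these crawlers announce themselves with a recognizable token in the HTTP `User-Agent` header. The sketch below labels requests by matching those tokens; the token table reflects commonly published crawler names (Honey is omitted because it has no single well-known token), and `identify_crawler` is a hypothetical helper. Because any client can fake a User-Agent, treat the result only as a first-pass label.

```python
# Commonly published User-Agent tokens for major crawlers.
KNOWN_CRAWLERS = {
    "Googlebot": "Google",
    "bingbot": "Microsoft Bing",
    "Amazonbot": "Amazon",
    "DuckDuckBot": "DuckDuckGo",
    "YandexBot": "Yandex",
    "Baiduspider": "Baidu",
    "Slurp": "Yahoo",
}


def identify_crawler(user_agent):
    """Return the crawler's operator if a known token appears in the User-Agent, else None.

    User-Agent strings are trivially spoofed, so this is a label, not proof of identity.
    """
    ua = user_agent.lower()
    for token, operator in KNOWN_CRAWLERS.items():
        if token.lower() in ua:
            return operator
    return None
```

For example, the User-Agent `Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)` is labeled `"Google"`, while an ordinary browser string returns `None`.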

There are many web crawling tools, each with its own features and suited to different needs and technical skill levels. Here are some common ones:

- Scrapy: A powerful open-source web crawling framework written in Python. Scrapy is suited to complex crawling tasks, processes requests asynchronously, and can fetch large amounts of data quickly.

- Beautiful Soup: A Python library for parsing HTML and XML documents. It is commonly paired with the third-party Requests library, which downloads the pages that Beautiful Soup then parses to extract data.

- Selenium: Although mainly used for automating web browsers (typically to test web applications), Selenium can also serve as a web crawler and is especially suited to interactive scraping tasks such as simulating clicks and submitting forms.

- Puppeteer: A Node.js library that controls Chrome or Chromium, typically in headless mode, making it suitable for scraping JavaScript-heavy applications.

- curl: A command-line tool for transferring data using URL syntax, usable interactively or in scripts to fetch web pages and files.

- Wget: A command-line tool for downloading files non-interactively, which can also download websites recursively.

- Apache Nutch: This is a highly scalable open-source web crawler software project. Nutch can run on Apache Hadoop clusters, making it suitable for large-scale data scraping.

- Octoparse: This is a user-friendly, no-code web scraping tool that allows users to quickly set up scraping tasks through a visual interface.

- ParseHub: This is a modern visual data scraping tool that supports complex data scraping needs, such as handling AJAX and JavaScript. ParseHub offers a clean graphical interface that enables non-technical users to easily scrape data.

- Common Crawl: This is not a tool but an open project that provides a large-scale dataset of web pages. Researchers and companies can use this pre-crawled data directly instead of running their own crawlers.

Each of these tools has its own strengths, so the right choice depends on your needs and technical skills. Developers with strong programming skills get the most flexibility and control from Scrapy and Beautiful Soup; users who want a simple solution can turn to Octoparse or ParseHub, whose visual interfaces make it quick to set up scraping tasks.

The Impact of Web Crawlers on SEO

Search Engine Optimization (SEO) is the practice of making a website's content, products, or services easier for users to find through search engines. Websites that are hard to crawl tend to rank lower on Search Engine Results Pages (SERPs), and websites that cannot be crawled at all do not appear in the results. To improve search rankings, SEO teams fix problems such as missing page titles, duplicate content, and broken links, because these make a site harder to crawl and index.
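The three problems named above can be detected from a site's own crawl results. The sketch below assumes a hypothetical input format: a dict mapping each crawled URL to its HTTP status, title, body text, and outgoing links. It flags missing titles, links pointing at pages that returned an error status, and pages with byte-identical bodies. It only checks links to pages present in the input, and real duplicate detection usually also catches near-duplicates, which an exact hash does not.

```python
import hashlib
from collections import defaultdict


def audit_pages(pages):
    """Flag missing titles, broken internal links, and exact duplicate content.

    `pages` maps URL -> {"status": int, "title": str or None, "body": str, "links": [str]}.
    Returns a list of (issue_type, detail) tuples.
    """
    issues = []
    by_hash = defaultdict(list)  # content fingerprint -> URLs sharing it
    for url, page in pages.items():
        if page["status"] >= 400:
            continue  # error pages are reported through the links that point at them
        if not (page.get("title") or "").strip():
            issues.append(("missing_title", url))
        digest = hashlib.sha256(page["body"].encode("utf-8")).hexdigest()
        by_hash[digest].append(url)
        for link in page.get("links", []):
            target = pages.get(link)
            if target is not None and target["status"] >= 400:
                issues.append(("broken_link", f"{url} -> {link}"))
    for urls in by_hash.values():
        if len(urls) > 1:
            issues.append(("duplicate_content", ", ".join(sorted(urls))))
    return issues
```

Running an audit like this after each crawl of your own site gives SEO teams a concrete list of pages to fix.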

The Relationship Between Bot Management and Crawlers

Malicious bots accessing your site can cause significant damage, ranging from poor user experience and server crashes to data theft, and the damage can escalate over time. However, while blocking malicious bots, it is important to keep letting benign bots such as web crawlers access the site. Tencent EdgeOne Bot management allows benign bots to continue accessing the website while reducing malicious bot traffic. The product automatically updates its whitelist of benign bots such as web crawlers, keeping them working smoothly while giving you visibility into and control over their traffic.
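Because a malicious bot can simply claim to be Googlebot in its User-Agent, benign crawlers are often verified with a reverse-then-forward DNS check, a technique Google and Bing both document for their crawlers. The sketch below is a generic illustration of that check, not a description of how EdgeOne implements its whitelist. The hostname suffixes cover only two example crawlers and should be confirmed against each operator's current documentation; the resolver functions are injectable for testing, and `socket.gethostbyname_ex` handles IPv4 only.

```python
import socket

# Hostname suffixes documented for reverse-DNS verification (two examples only).
VERIFIED_SUFFIXES = {
    "Googlebot": (".googlebot.com", ".google.com"),
    "bingbot": (".search.msn.com",),
}


def is_verified_crawler(ip, claimed_bot,
                        reverse_lookup=socket.gethostbyaddr,
                        forward_lookup=socket.gethostbyname_ex):
    """Return True if `ip` passes a reverse-then-forward DNS check for `claimed_bot`."""
    suffixes = VERIFIED_SUFFIXES.get(claimed_bot)
    if not suffixes:
        return False
    try:
        hostname, _, _ = reverse_lookup(ip)  # step 1: IP address -> hostname
        if not hostname.endswith(suffixes):
            return False
        _, _, addresses = forward_lookup(hostname)  # step 2: hostname -> IP addresses
    except OSError:  # socket.herror and socket.gaierror are subclasses of OSError
        return False
    # Step 3: the forward lookup must map back to the original IP.
    return ip in addresses
```

The forward lookup in step 3 matters: an attacker who controls the reverse DNS for their own IP range can make it return a `googlebot.com` name, but cannot make Google's DNS resolve that name back to the attacker's address.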

If you want to protect your website from malicious attacks while still allowing legitimate crawlers in to support your SEO, try Tencent EdgeOne for protection and acceleration.