With the widespread use of the internet and technological advancements, bot attacks have emerged as a significant security threat. This article aims to define bot attacks, discuss different types of attacks, highlight potential impacts, and provide preventive measures to counter these attacks.

What are Bot Attacks?

Bot is short for robot. It refers to an automated program or script that can simulate human user behavior and perform tasks on its own. Bots operate over the internet and carry out actions such as responding to messages, scraping web content, or executing specific computational tasks. Many serve beneficial purposes, such as the web crawlers search engines use for indexing or the chatbots used for customer service. Others are used maliciously, for example to conduct cyber attacks, spread spam, or steal personal information. Malicious bots are often classified as malware or operate as part of a botnet (a network of compromised devices under an attacker's control), and they let attackers automate their attacks, posing a threat to network security.

Bot attacks are malicious activities carried out against network systems using automated programs (bots or scripts). These bots can simulate human user behavior and automatically perform tasks such as scanning for vulnerabilities, sending spam emails, and brute-forcing passwords. Attackers use them to gain illicit profit, disrupt the normal operation of network systems, or steal sensitive information. Because the attacks are automated, they can be run at large scale against many victims in a short time, which increases the number of successful compromises. Bot attacks pose a serious security threat to individuals, organizations, and the network ecosystem as a whole.

Types of Bot Attacks

Web Scraping Bots

A web scraping bot, or web scraper, is an automated program that retrieves web pages and extracts content from them, such as text, images, and links. Scraping is closely related to crawling: web crawlers (also called web spiders) follow predefined rules and algorithms to visit pages automatically, and search engines use the data they extract to build indexes so that users can find relevant pages through search queries.

Well-behaved scraping and crawling bots follow the rules a website publishes in its robots.txt file (the Robots Exclusion Protocol), so that they do not overload the site or access areas it asks them to avoid. They also limit how frequently and how deeply they crawl. Compliance is voluntary, however: robots.txt is advisory and does not technically prevent a bot from accessing any page.
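The check a well-behaved bot performs before fetching a page can be sketched with Python's standard-library `urllib.robotparser` module. The robots.txt policy, the `ExampleCrawler` name, and the example.com URLs below are hypothetical, and the policy is parsed from a string rather than downloaded so the sketch runs offline.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt policy, used only for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
Crawl-delay: 5
"""

def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly instead of fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

def allowed(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """Return True if the parsed policy permits user_agent to fetch url."""
    return parser.can_fetch(user_agent, url)

if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    print(allowed(rp, "ExampleCrawler", "https://example.com/products/42"))  # True
    print(allowed(rp, "ExampleCrawler", "https://example.com/admin/login"))  # False
    print(rp.crawl_delay("ExampleCrawler"))  # 5
```

A compliant crawler would skip disallowed URLs and wait the requested delay between requests; a malicious bot can simply skip this check, which is why robots.txt alone is not a defense.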

However, scraping and crawling bots can also be used maliciously, for example to harvest sensitive information, invade privacy, or support phishing activities. Such bots often ignore a website's crawling rules to obtain data illegally or to attack the site. Website administrators therefore take measures to detect and block malicious crawlers in order to protect their websites and users.

Spam Bots

A spam bot is an automated program designed to send large volumes of spam email. Spam bots are created for purposes such as advertising, spreading malware, and running online scams. They can automatically harvest or generate large numbers of email addresses and then send spam to them.

These bots typically scan websites, forums, social media, and other sources to gather email addresses. They may also use address lists taken from infected computers or botnets. Once they have accumulated enough addresses, spam bots automatically send out a massive number of spam emails, often containing advertisements, fraudulent messages, malicious links, or attachments.

Spam bots pose a significant nuisance and security threat to individuals and organizations. They consume network bandwidth and storage space, and can potentially spread malware, deceive users into providing personal information, or engage in financial fraud. Common measures to combat spam bots include using anti-spam filters, educating users to recognize spam emails, and strengthening network security measures to prevent bot infiltration.

Brute-Force Bots

A brute-force bot is an automated program that tries to crack passwords or bypass the security measures of restricted systems. It does this by trying a large number of possible passwords or access methods until it finds valid credentials or a way around the security controls.

Brute-force bots typically use either dictionary attacks or exhaustive brute-force search. A dictionary attack tries passwords from a prepared list (for example, common or previously leaked passwords), while an exhaustive search tries every possible combination of characters to crack a password. Because the bots automate these attempts, they can iterate through very large numbers of candidates quickly to find valid credentials.
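Why exhaustive search is expensive, and why password length and character variety matter, can be shown with a short calculation: the number of candidates is the alphabet size raised to the password length. The guessing rate of 10^10 guesses per second below is a hypothetical figure for an offline attack on stolen hashes; online attacks against a login form are far slower. A dictionary attack's cost, by contrast, is simply the length of its word list, which is why common passwords fall quickly regardless of how long they are.

```python
import string

def keyspace(alphabet_size: int, length: int) -> int:
    """Candidates an exhaustive search must try for passwords of exactly `length` characters."""
    return alphabet_size ** length

def worst_case_seconds(candidates: int, guesses_per_second: float) -> float:
    """Time needed to try every candidate at a fixed guessing rate."""
    return candidates / guesses_per_second

if __name__ == "__main__":
    lowercase = len(string.ascii_lowercase)  # 26 symbols
    printable = len(string.ascii_letters + string.digits + string.punctuation)  # 94 symbols
    RATE = 1e10  # hypothetical offline guessing rate (guesses per second)
    for size in (lowercase, printable):
        n = keyspace(size, 8)
        # Prints the alphabet size, keyspace, and worst-case time in whole seconds.
        print(size, n, round(worst_case_seconds(n, RATE)))
    # 26 208827064576 21
    # 94 6095689385410816 609569
```

At this assumed rate, eight lowercase letters are exhausted in about 21 seconds, while eight characters drawn from all 94 printable ASCII symbols take about 7 days.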

Brute-force bots threaten the security of systems and accounts. They can attempt to crack user accounts, email accounts, network servers, and more in order to reach sensitive information, gain unauthorized access, or carry out other malicious activities. Common preventive measures include using strong passwords, limiting login attempts, enabling multi-factor authentication, monitoring for abnormal login activity, and using intrusion detection systems to detect and block brute-force attempts.
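"Limiting login attempts" can be sketched as a per-account counter that locks the account after too many failures within a sliding time window. This is a minimal in-memory illustration: the class name, the limit of 5 failures, and the 15-minute window are assumptions, and a production system would store the counters in shared storage and usually combine them with per-IP limits.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict

class LoginAttemptLimiter:
    """Lock an account after `max_failures` failed logins within `window_seconds`.

    The defaults are illustrative, not recommendations."""

    def __init__(self, max_failures: int = 5, window_seconds: float = 900.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the behavior can be tested
        self._failures: Dict[str, Deque[float]] = defaultdict(deque)

    def _prune(self, account: str) -> Deque[float]:
        """Drop failure timestamps that have fallen outside the window."""
        now = self.clock()
        q = self._failures[account]
        while q and now - q[0] > self.window_seconds:
            q.popleft()
        return q

    def is_locked(self, account: str) -> bool:
        return len(self._prune(account)) >= self.max_failures

    def record_failure(self, account: str) -> None:
        self._prune(account).append(self.clock())

    def record_success(self, account: str) -> None:
        # A successful login clears the failure history.
        self._failures.pop(account, None)

if __name__ == "__main__":
    fake_now = [0.0]
    limiter = LoginAttemptLimiter(max_failures=3, window_seconds=60, clock=lambda: fake_now[0])
    for _ in range(3):
        limiter.record_failure("alice")
    print(limiter.is_locked("alice"))  # True
    fake_now[0] = 61.0  # the old failures have now aged out of the window
    print(limiter.is_locked("alice"))  # False
```

A login handler would call `is_locked` before checking the password and reject the attempt while the account is locked.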

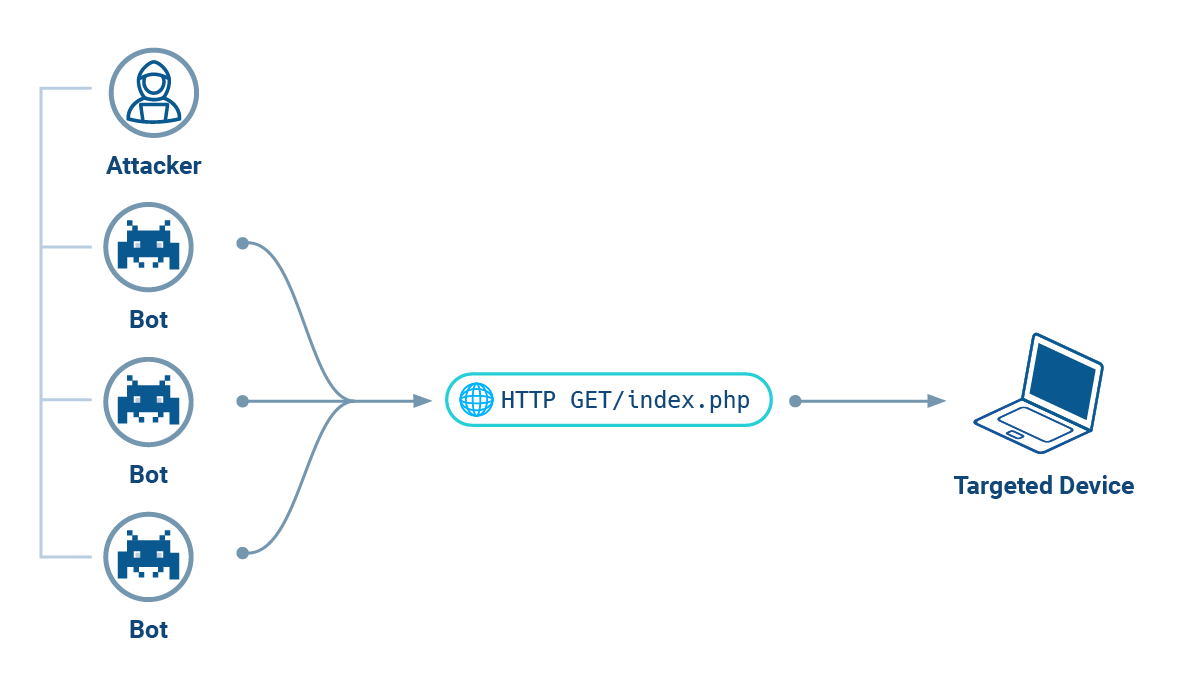

DDoS Bots

A DDoS bot is an automated program used to launch Distributed Denial of Service (DDoS) attacks. DDoS attacks aim to overwhelm a target server with a large volume of requests sent simultaneously from many machines, leaving it unable to respond to legitimate user requests.

DDoS bots are typically part of a botnet, a group of compromised computers or devices. The attacker controls these bots through a command-and-control server and sends it instructions to coordinate the attack. On receiving the instructions, the bots flood the target server with request traffic that exceeds its processing capacity, making the service unavailable or slow to respond.

DDoS bot attacks pose a significant threat to target servers and networks. They can result in service disruptions, website crashes, and network congestion, and may even serve as a smokescreen for other malicious activities. Common defense measures against DDoS bot attacks include using firewalls, intrusion detection systems, load balancers, and traffic filters to filter and block malicious traffic. Additionally, traffic analysis tools are used to detect and respond to abnormal traffic patterns. Collaboration and coordination with Internet Service Providers (ISPs) and security teams are also crucial in mitigating DDoS attacks.

Web Crawling Bots

A web crawling bot, or web crawler, is an automated program that browses the internet and collects information. Web crawlers automatically access web pages and extract data for purposes such as search engine indexing, data mining, and monitoring websites for changes.

Web crawling bots navigate the internet by following predefined rules and algorithms. They start from one or more seed URLs, fetch each page, parse it for links, and queue newly discovered links to visit later. Along the way they collect content, links, and metadata, which they store or pass on to downstream processing programs.
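The seed-fetch-parse-queue loop described above is essentially a breadth-first traversal of the link graph. In this sketch the hypothetical `LINK_GRAPH` dictionary stands in for fetching pages over HTTP and parsing their HTML; a real crawler would also check robots.txt, throttle its requests, and normalize URLs.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical in-memory "web": each URL maps to the links found on that page.
LINK_GRAPH: Dict[str, List[str]] = {
    "https://example.com/": ["https://example.com/a", "https://example.com/b"],
    "https://example.com/a": ["https://example.com/c", "https://example.com/"],
    "https://example.com/b": ["https://example.com/c"],
    "https://example.com/c": [],
}

def crawl(seed: str, links: Dict[str, List[str]], max_pages: int = 100) -> List[str]:
    """Breadth-first crawl from `seed`, visiting each URL at most once."""
    frontier = deque([seed])       # URLs waiting to be fetched
    seen: Set[str] = {seed}        # URLs already queued, to avoid revisits and loops
    visited: List[str] = []
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        visited.append(url)                  # "fetch" the page
        for link in links.get(url, []):      # "parse" its outgoing links
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return visited

if __name__ == "__main__":
    for page in crawl("https://example.com/", LINK_GRAPH):
        print(page)
```

The `seen` set matters because real link graphs contain cycles (page `/a` links back to `/` here); without it the crawler would revisit pages indefinitely.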

Web crawling bots play a crucial role on the internet, enabling search engines to build indexes and provide accurate search results. They are also used in areas such as data mining, market research, and competitive intelligence gathering. However, some web crawling bots can have negative impacts on website performance and bandwidth, leading website administrators to take measures to restrict or block their access.

It is important to note that while most web crawling bots are legitimate, there are also malicious ones used for illegal purposes such as data theft, content duplication, and phishing. Therefore, protecting websites from malicious web crawling bot attacks is also an important security measure.

Potential Impacts of Bot Attacks

Bot attacks can affect the targeted systems, networks, and users in several ways. Common effects include:

- Service disruption: Bot attacks can disrupt the targeted system or network, leaving it unable to function or provide services. This can significantly affect business operations, user experience, and reliability.

- Website crashes: Large-scale bot attacks can crash websites or make them unavailable, preventing users from accessing or interacting with them.

- Bandwidth consumption: Bot attacks generate large amounts of network traffic that consume the targeted system's bandwidth. The resulting congestion can slow down or destabilize network connections for legitimate users.

- Data leakage: Some bot attacks aim to obtain sensitive information or steal data. Attackers may use bots to gain access to user accounts, personal information, financial data, and other sensitive information, leading to privacy breaches and security risks.

- Masking malicious activities: Bot attacks are sometimes used as a distraction. By diverting the attention of security teams, attackers can carry out more destructive attacks or intrusions elsewhere.

- Damage to brand reputation: Bot attacks can harm the reputation of the targeted organization. If users cannot access the website or their data is breached, they may lose trust in the company and form a negative impression of it.

Preventive Measures Against Bot Attacks

To prevent bot attacks, you can take the following measures:

- Identify and filter bot traffic: Use bot detection techniques such as CAPTCHA, IP blocklisting, and User-Agent inspection to identify and filter bot traffic. This blocks many less sophisticated bots, although determined attackers can rotate IP addresses and spoof User-Agent strings.

- Use CAPTCHA: Require a CAPTCHA for critical operations such as registration, login, and password reset, so that automated scripts cannot easily complete these actions.

- Limit access frequency: Set rate limits on the number of requests from individual IP addresses. This prevents bots from attacking at high speed and reduces the load on the system.

- Implement a robots.txt policy: Publish a robots.txt file (the Robots Exclusion Protocol) that states which paths bots may access and how they should behave. Well-behaved bots follow it; malicious bots often ignore it, so requests to disallowed paths can help you identify them.

- Use a Web Application Firewall (WAF): A WAF can detect and block bot attacks by filtering malicious traffic before it reaches your website and applications.

- Regularly update and patch vulnerabilities: Keep your website and applications up to date and patch vulnerabilities promptly. Because attackers use bots to find and exploit known vulnerabilities, this reduces the risk of a successful attack.

- Monitor and analyze traffic: Use traffic monitoring and analysis tools to detect abnormal traffic patterns and behaviors, so that you can identify and respond to bot attacks promptly.

- Establish cooperation and coordination: Work with Internet Service Providers (ISPs) and other security teams to combat bot attacks collectively. Sharing threat intelligence and coordinating defenses improves everyone's ability to respond.
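The first measure, filtering by IP blocklist and User-Agent, can be sketched with Python's standard `ipaddress` module. The blocked networks (taken from the reserved documentation ranges) and the User-Agent tokens are hypothetical placeholders; real deployments would load them from threat intelligence feeds or configuration.

```python
import ipaddress

# Hypothetical blocklists for illustration only.
BLOCKED_NETWORKS = [ipaddress.ip_network(n) for n in ("203.0.113.0/24", "198.51.100.7/32")]
SUSPICIOUS_UA_TOKENS = ("curl/", "python-requests", "scrapy", "headlesschrome")

def is_blocked_ip(client_ip: str) -> bool:
    """True if the client address falls inside any blocked network."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in BLOCKED_NETWORKS)

def is_suspicious_user_agent(user_agent: str) -> bool:
    """True for an empty User-Agent or one containing a known automation-tool token."""
    ua = user_agent.lower()
    return ua == "" or any(token in ua for token in SUSPICIOUS_UA_TOKENS)

def should_block(client_ip: str, user_agent: str) -> bool:
    return is_blocked_ip(client_ip) or is_suspicious_user_agent(user_agent)

if __name__ == "__main__":
    print(should_block("203.0.113.50", "Mozilla/5.0"))          # True (blocked network)
    print(should_block("192.0.2.1", "python-requests/2.31"))    # True (tool User-Agent)
    print(should_block("192.0.2.1", "Mozilla/5.0 (X11; Linux)"))  # False
```

Because both signals are easy for an attacker to change, a filter like this is best treated as a cheap first layer in front of rate limiting and behavioral analysis.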
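Per-IP rate limiting, from the "Limit access frequency" measure, is commonly implemented as a token bucket: each client may burst up to a fixed number of requests, and its allowance refills at a steady rate. This is a minimal single-process sketch; the class name and parameters are illustrative, and a multi-server deployment would keep the buckets in shared storage.

```python
import time
from typing import Callable, Dict, Tuple

class TokenBucketLimiter:
    """Per-client token bucket allowing bursts of `capacity`, refilled at `rate` tokens/second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self._buckets: Dict[str, Tuple[float, float]] = {}  # ip -> (tokens, last_update)

    def allow(self, client_ip: str) -> bool:
        now = self.clock()
        # New clients start with a full bucket.
        tokens, last = self._buckets.get(client_ip, (self.capacity, now))
        # Refill in proportion to elapsed time, capped at capacity.
        tokens = min(self.capacity, tokens + (now - last) * self.rate)
        if tokens >= 1.0:
            self._buckets[client_ip] = (tokens - 1.0, now)  # spend one token
            return True
        self._buckets[client_ip] = (tokens, now)
        return False

if __name__ == "__main__":
    fake_now = [0.0]
    limiter = TokenBucketLimiter(rate=1.0, capacity=3.0, clock=lambda: fake_now[0])
    print([limiter.allow("192.0.2.1") for _ in range(4)])  # [True, True, True, False]
    fake_now[0] = 1.0  # one second later, one token has been refilled
    print(limiter.allow("192.0.2.1"))  # True
```

Requests that are refused would typically receive an HTTP 429 (Too Many Requests) response.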
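For "Monitor and analyze traffic", one simple way to flag abnormal patterns is to count requests per client over a time window and flag clients far above the typical count. This sketch uses the median plus a multiple of the median absolute deviation (MAD), which a few very heavy senders distort less than the mean and standard deviation would. The multiplier `k = 10` and the floor of 1 on the MAD are illustrative assumptions, and real systems combine many more signals (paths, timing, response codes).

```python
from collections import Counter
from statistics import median
from typing import Iterable, List

def flag_heavy_hitters(client_ips: Iterable[str], k: float = 10.0) -> List[str]:
    """Flag clients whose request count in one window exceeds median + k * MAD."""
    counts = Counter(client_ips)
    if not counts:
        return []
    values = list(counts.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)
    # Floor the MAD at 1 so identical counts do not flag every slightly busier client.
    threshold = med + k * max(mad, 1.0)
    return sorted(ip for ip, c in counts.items() if c > threshold)

if __name__ == "__main__":
    # Hypothetical one-minute access log, reduced to client IPs.
    log = (["192.0.2.1"] * 12 + ["192.0.2.2"] * 9 + ["192.0.2.3"] * 11
           + ["192.0.2.4"] * 10 + ["203.0.113.9"] * 400)
    print(flag_heavy_hitters(log))  # ['203.0.113.9']
```

Here the median count is 11 and the MAD is 1, so the threshold is 21 requests and only the client sending 400 is flagged.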

How Does Tencent EdgeOne Manage Bots?

Tencent EdgeOne Bot management is a service that helps maintain the quality of your website traffic. Some of your website's visits may be initiated not by real users but by automated programs, commonly called bots. While some bots (e.g., search engine crawlers) benefit the website, others may carry out the attacks described above. Tencent EdgeOne Bot management processes requests in the following order:

- Exception rules: Exempt specific requests from the bot management module. For example, traffic from specified partner IPs, or test traffic carrying a specific User-Agent.

- Custom bot rules: Flexible, user-defined bot management rules that support multiple identification mechanisms and disposal options. For example, delay responses to half of the automated shopping-cart crawlers and silently handle the other half.

- Basic bot management: Identifies bot tools and controls them by matching the User-Agent header and client IP of each request against the known characteristics of search engines and tools.

- Client reputation: Identifies malicious bots and controls them by checking the client IP against a threat intelligence database.

- Bot intelligence: Suited to situations that require rapid deployment and quick identification and analysis of website traffic patterns. Based on a clustering analysis algorithm and a big-data model intelligence engine, it assesses the risk of each request from multiple perspectives, helping you use Bot management to quickly identify and handle known and unknown bots and reducing the risk that a single fixed strategy is bypassed.

- Active detection: Identifies human browser clients (not applicable to native mobile apps) by using cookies and JavaScript to verify the client's runtime environment and access behavior.
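The ordered processing described above can be modeled as a chain of stages, each of which either returns an action or passes the request on. This is a hypothetical sketch, not EdgeOne's API or configuration format: the stage functions, actions, and data sets are invented for illustration, and it assumes for simplicity that the first stage to reach a decision ends processing, which may not match EdgeOne's actual behavior. Bot intelligence and active detection are omitted because they rely on models and client-side JavaScript.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

@dataclass
class Request:
    client_ip: str
    user_agent: str
    path: str

# Each stage returns an action string, or None to pass the request to the next stage.
Stage = Callable[[Request], Optional[str]]

# Hypothetical data standing in for configured rules and threat intelligence.
PARTNER_IPS = {"192.0.2.10"}
TOOL_UA_TOKENS = ("python-requests", "curl/")
LOW_REPUTATION_IPS = {"203.0.113.66"}

def exception_rule(req: Request) -> Optional[str]:
    # Exempted traffic skips bot management entirely.
    return "bypass" if req.client_ip in PARTNER_IPS else None

def custom_rule(req: Request) -> Optional[str]:
    # Example custom rule: slow down self-declared bots hitting the shopping cart.
    if req.path.startswith("/cart") and "bot" in req.user_agent.lower():
        return "delay"
    return None

def basic_bot_management(req: Request) -> Optional[str]:
    ua = req.user_agent.lower()
    return "block" if any(t in ua for t in TOOL_UA_TOKENS) else None

def client_reputation(req: Request) -> Optional[str]:
    return "block" if req.client_ip in LOW_REPUTATION_IPS else None

PIPELINE: List[Stage] = [exception_rule, custom_rule, basic_bot_management, client_reputation]

def evaluate(req: Request, stages: List[Stage] = PIPELINE) -> str:
    for stage in stages:
        action = stage(req)
        if action is not None:
            return action  # first decisive stage wins (simplifying assumption)
    return "allow"

if __name__ == "__main__":
    print(evaluate(Request("192.0.2.10", "python-requests/2.31", "/api")))  # bypass
    print(evaluate(Request("198.51.100.1", "ShopBot/1.0", "/cart/add")))    # delay
    print(evaluate(Request("198.51.100.1", "Mozilla/5.0", "/")))            # allow
```

The first call shows why order matters: the partner request would be blocked by the tool User-Agent check, but the exception rule runs first and exempts it.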