Edge AI: The Convergence of Cloud Computing and AI

In 2006, Google's then-CEO Eric Schmidt introduced the concept of "cloud computing" at a search engine conference, an event widely regarded as the start of the cloud computing era. Since then, the cloud computing market has matured and its applications have steadily expanded, transforming how the IT industry operates and accelerating digital transformation across industries. It became clear that the era of the cloud had arrived, and people eagerly climbed onto this metaphorical "cloud" filled with endless possibilities.

In 1956, the concept of AI was first introduced at the Dartmouth Conference. Over the decades, this lofty technological concept went through stages of anticipation, skepticism, prosperity, and stagnation, until OpenAI launched ChatGPT on November 30, 2022. Built on the Generative Pre-trained Transformer (GPT) model, ChatGPT was fine-tuned using a combination of supervised learning and reinforcement learning. ChatGPT's exceptional language understanding and generation capabilities made people realize that the era of AI, once only seen in science fiction movies, was on its way!

So, is AI, a concept born early but famous late, about to replace the younger cloud computing as the darling of the new era? My answer is no. The rapid development of AI technology does signify that we have entered a new era of intelligent processing and decision-making, but it does not make cloud computing obsolete. AI and cloud computing are complementary rather than substitutes for each other, and they can be deeply integrated to jointly promote technological progress and industrial upgrading.

This article will explore the role of cloud computing in AI, edge AI architecture, edge AI solutions, and other issues, demonstrating that cloud computing and AI complement each other's strengths, working together to usher in a smarter, more efficient new era of technology.

From Cloud Computing to Edge Serverless

What is Cloud Computing

Cloud computing is an upgrade to the internet architecture. It virtualizes computing, storage, network, and other resources into a shared resource pool, which is more resource- and cost-efficient than dedicated physical machines and easier to manage. There are three common service models in cloud computing: Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS):

- Infrastructure as a Service (IaaS): Cloud providers offer virtualized computing resources. Users can rent virtual machines, storage, and network components to build and manage their own IT infrastructure.

- Platform as a Service (PaaS): Cloud providers offer a complete platform, including development tools, middleware, and runtime environments for building, testing, and deploying applications. This simplifies the application development process, allowing developers to focus on coding.

- Software as a Service (SaaS): Cloud providers offer software applications on a subscription basis, allowing users to access and use them without the need for local installation or maintenance of the software applications.

Cloud Computing & Serverless

Serverless is an evolutionary form of cloud computing architecture that more effectively utilizes cloud resources and abstracts the complexity of managing these resources. The Serverless architecture brings the following advantages:

- Cost-effectiveness: Charging is based on the resources actually used by the consumer, which means businesses can increase or decrease resources according to demand, avoiding expensive upfront hardware investments and maintenance costs.

- Scalability and flexibility: The cloud allows businesses to quickly increase or decrease resources based on actual needs, whether it's storage space, computing power, or bandwidth. This flexibility is crucial for responding to market changes and business peak periods.

- Global deployment: Cloud platforms typically have data centers distributed worldwide, enabling businesses to deploy services and applications globally, optimizing performance and reducing latency while ensuring data compliance with local regulations.

- Data backup and disaster recovery: Provides efficient automated backup and recovery capabilities, ensuring data security and integrity. Good recovery capability is key to ensuring business continuity in the event of system failures or data loss.

- Security and compliance: Offers a higher level of security measures compared to traditional IT environments. Additionally, cloud services help businesses meet various industry-specific compliance requirements, such as GDPR, HIPAA, etc.
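To make the cost-effectiveness point concrete, the following sketch compares a pay-per-use bill with the cost of an always-on server. All prices and workload figures are hypothetical values chosen for illustration; they are not the rates of any real provider.

```javascript
// Hypothetical prices: illustrative only, not any provider's real rates.
const PRICE_PER_MILLION_REQUESTS = 0.5; // USD
const PRICE_PER_GB_SECOND = 0.00002; // USD
const FIXED_SERVER_MONTHLY = 200; // USD for an always-on server

// Pay-per-use bill: charged only for requests served and compute consumed.
function serverlessMonthlyCost(requests, avgDurationMs, memoryGb) {
  const requestCost = (requests / 1e6) * PRICE_PER_MILLION_REQUESTS;
  const gbSeconds = requests * (avgDurationMs / 1000) * memoryGb;
  return requestCost + gbSeconds * PRICE_PER_GB_SECOND;
}

// A quiet month and a busy month for the same function.
const quietMonth = serverlessMonthlyCost(2e6, 50, 0.125);
const busyMonth = serverlessMonthlyCost(200e6, 50, 0.125);
console.log(quietMonth.toFixed(2), busyMonth.toFixed(2), FIXED_SERVER_MONTHLY);
```

Under these assumed numbers the bill tracks usage in both directions, whereas the fixed server costs the same whether it is idle or saturated.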

What is Edge Serverless

As data volumes explode and user demands for response speed increase, the processing pressure on Serverless data centers will also grow. To address this challenge, Edge Serverless has emerged. Edge Serverless migrates data processing and analysis tasks from central servers to edge devices closer to the data source, thereby reducing the latency and cost of data transmission. This not only improves the speed of data processing but also enhances the reliability and security of the system.

In July 2017, Amazon released Lambda@Edge, a solution for deploying functions to the edge in containerized form. In 2021, it launched CloudFront Functions, based on new virtualization and isolation technologies, further optimizing cold start latency and cost. In September 2017, Cloudflare released Workers, an edge function-as-a-service solution based on the V8 engine from Chromium, achieving millisecond-level cold start latency. In 2019, it introduced Workers KV, a globally distributed key-value store that can be used in conjunction with Workers. In the same year, Akamai launched EdgeWorkers, with functionality and performance similar to Cloudflare Workers. Taking a different technical approach from Cloudflare, Fastly invested in WebAssembly and developed its own Lucet compiler and runtime. In 2021, it officially released Compute@Edge, reducing cold start latency to microsecond levels.

Tencent Cloud EdgeOne Edge Functions

Tencent Cloud EdgeOne Edge Functions represent an innovative form of edge Serverless application, integrating Serverless architecture with CDN platforms. By deploying function code on edge nodes closer to users, it achieves edge-based data processing. This means that when a user initiates a request, data is processed and analyzed directly on the edge node. This significantly reduces data transmission latency and improves response times.

EdgeOne Edge Functions inherit many advantages of Serverless architecture. Developers don't need to worry about the operation and management of underlying infrastructure, and can focus solely on writing and deploying function code, greatly improving development efficiency. At the same time, benefiting from the cost advantages of Serverless architecture and edge servers, using EdgeOne Edge Functions can significantly reduce enterprise hardware usage and maintenance costs.
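As a minimal sketch of what processing a request on the edge node can look like, the function below answers directly at the edge by choosing a greeting from the `Accept-Language` header, without contacting an origin server. It assumes the same Service Worker-style `fetch` event interface used by the code sample later in this article; the `GREETINGS` table and the `pickLanguage` helper are hypothetical, and the helper ignores quality values for brevity.

```javascript
// Hypothetical lookup table; a real function might read this from edge storage.
const GREETINGS = { en: "Hello", fr: "Bonjour", es: "Hola" };

// Pick the first listed language that is supported (q-values are ignored here).
function pickLanguage(acceptLanguage, supported) {
  const tags = (acceptLanguage || "")
    .split(",")
    .map((part) => part.split(";")[0].trim().slice(0, 2).toLowerCase());
  return tags.find((tag) => supported.includes(tag)) || "en";
}

async function handleEvent(event) {
  const header = event.request.headers.get("Accept-Language");
  const lang = pickLanguage(header, Object.keys(GREETINGS));
  // The response is produced entirely on the edge node.
  return new Response(JSON.stringify({ lang, greeting: GREETINGS[lang] }), {
    headers: { "Content-Type": "application/json; charset=utf-8" },
  });
}

// Register the handler only where a fetch-event runtime provides this global.
if (typeof addEventListener === "function") {
  addEventListener("fetch", (event) => event.respondWith(handleEvent(event)));
}
```

Keeping the decision logic in a plain function such as `pickLanguage` makes it easy to unit-test outside the edge runtime.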

From Edge Serverless to Edge AI

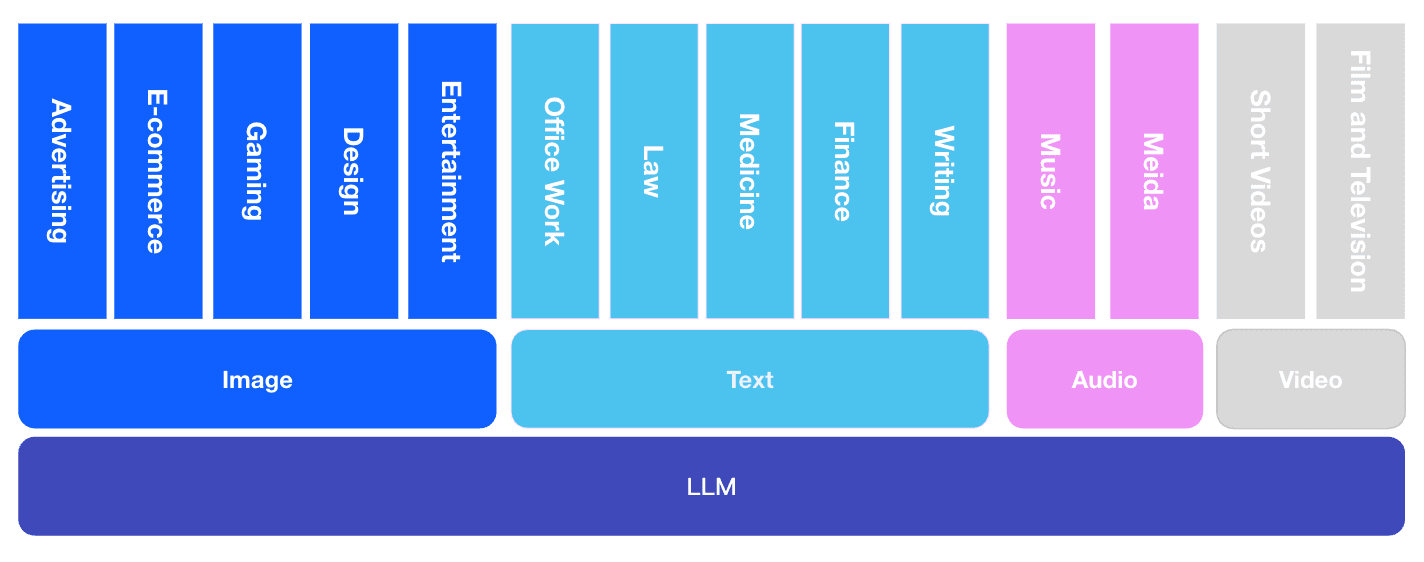

Large Language Models (LLMs) have become the focus of industry attention due to their powerful natural language processing capabilities and wide-ranging application prospects. However, behind the rapid development of these large models, issues of computing power and cost have become increasingly prominent, presenting a dual challenge that AI practitioners must face.

The massive parameter counts and complex neural network structures of LLMs place extremely high demands on computational power. These models require vast amounts of data for training to ensure their accuracy and generalization ability. As the scale of models continues to expand, the required computational resources are growing exponentially. To meet the computational needs of LLMs, AI practitioners must invest heavily in upgrading computing equipment and adopting advanced computational resources such as high-performance GPUs and TPUs, all of which bring additional costs that will ultimately be passed on to consumers.

The contradiction between high computational costs and ever-increasing computational demands has become a major challenge for AI practitioners. Fortunately, the deepening application of distributed computing and Serverless architecture systems provides new ideas and methods for solving this problem.

For AI applications, the advantages of Serverless architecture are particularly evident. Firstly, it can avoid waste caused by idle computing resources, as resources are only allocated when actually used. Secondly, Serverless architecture can automatically scale to handle sudden computational demands, avoiding service interruptions or performance degradation due to insufficient resources.
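The two properties described above, no cost for idle capacity and automatic scaling under bursts, can be sketched as a simple scaling rule. This is an illustrative model only; the function name and its parameters are hypothetical tuning knobs, not settings of any particular platform.

```javascript
// Decide how many function instances to run for the current load.
// All parameters are hypothetical tuning knobs, not platform settings.
function desiredInstances(pendingRequests, perInstanceConcurrency, maxInstances) {
  if (pendingRequests <= 0) return 0; // scale to zero: no idle capacity is kept
  const needed = Math.ceil(pendingRequests / perInstanceConcurrency);
  return Math.min(needed, maxInstances); // cap bursts at the configured limit
}

console.log(desiredInstances(0, 4, 50)); // idle
console.log(desiredInstances(10, 4, 50)); // normal load
console.log(desiredInstances(1000, 4, 50)); // sudden burst
```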

What is Edge AI

Edge AI is a technological concept that combines AI with edge Serverless computing. It refers to the technology of running artificial intelligence algorithms and models on local devices or edge nodes close to the data source. Its core idea is to move data processing and analysis from the cloud to locations closer to where data is generated, thereby achieving faster response times, lower latency, and enhanced privacy protection. This technology has wide applications in areas such as smartphones, wearable devices, smart homes, autonomous vehicles, and industrial Internet of Things (IoT).

The main advantages of Edge AI include real-time response capability, offline working ability, sensitive data protection, and network bandwidth savings. By processing data locally, it reduces the need to transmit large amounts of data to the cloud, thereby improving the overall efficiency and security of the system.

Edge AI Architecture

Combining the edge Serverless architecture with AI model management, fine-tuning, inference, and the scheduling and balancing of computational resources forms a comprehensive, one-stop service architecture for edge AI. It consists of four layers:

- Hardware Layer is the foundation of the Edge AI architecture, including various hardware resources and AI acceleration chips. These hardware components need to provide sufficient computing power under limited power consumption to support the operation of AI models.

- Model Layer is the core of Edge AI, including optimized and compressed machine learning or deep learning models. These models are typically trained in the cloud and then deployed to edge devices. Model optimization techniques such as quantization, pruning, and knowledge distillation are widely applied to adapt to the resource constraints of edge devices.

- Software Layer serves as the bridge connecting hardware and AI applications, including operating systems, drivers, middleware, and various development tools. This layer is responsible for managing hardware resources, optimizing performance, and providing API interfaces for upper-layer applications. Many vendors offer specialized Edge AI software development kits (SDKs) and runtime environments to simplify the development process.

- Application Layer is the part directly facing users, including various specific AI applications such as image recognition, speech processing, anomaly detection, etc. This layer utilizes the capabilities provided by the lower layers to implement specific business logic and user interactions.
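Of the model optimization techniques named in the Model Layer, quantization is the easiest to illustrate. The sketch below applies symmetric linear quantization to a small array of float32 weights, storing each as an int8 value plus one shared scale factor. It is a simplified, per-tensor illustration; production toolchains typically add calibration and per-channel scales, and the function names here are hypothetical.

```javascript
// Symmetric linear quantization of float32 weights to int8.
function quantizeInt8(weights) {
  let maxAbs = 0;
  for (const w of weights) maxAbs = Math.max(maxAbs, Math.abs(w));
  const scale = maxAbs === 0 ? 1 : maxAbs / 127; // float value per int8 step
  const q = new Int8Array(weights.length);
  for (let i = 0; i < weights.length; i++) {
    q[i] = Math.max(-127, Math.min(127, Math.round(weights[i] / scale)));
  }
  return { q, scale };
}

// Recover approximate float weights from the quantized form.
function dequantize({ q, scale }) {
  return Float32Array.from(q, (v) => v * scale);
}

const weights = new Float32Array([0.5, -1.0, 0.25, 0.0]);
const packed = quantizeInt8(weights);
const restored = dequantize(packed);
// Storage drops from 4 bytes to 1 byte per weight, at the cost of rounding error.
console.log(packed.q, packed.scale, restored);
```

The restored weights differ from the originals by at most about half a quantization step, which is the accuracy traded for a fourfold reduction in storage.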

Edge AI Process

Taking text-to-image generation, one of the most typical AI application scenarios, as an example, the calling and inference process of Edge AI is as follows:

- Client Request: The client calls the image generation API.

- Task Scheduling: A task ID is generated and the task is written into the queue; the image generation module retrieves tasks from the task queue and calls the inference cluster for processing.

- Image Generation: The inference cluster performs image generation inference.

- Result Storage: Generated results are stored in cloud storage components.

- Image Post-processing: Additional processing is performed on the generated images, such as creating preview images or watermarked images.

- Content Distribution: Processed images are distributed to clients through the CDN.

- Client Response: After the client receives the URL of the generated result, it accesses the URL to retrieve the content.

The collaboration of components such as task queue, image generation module, inference cluster, image processing, cloud storage components, and CDN realizes the full-process management from image generation to distribution. Clients can obtain the generated image content through simple API calls.
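The flow above can be sketched end to end with in-memory stand-ins. Everything here is a simplified illustration: the queue, the storage map, the `runInference` placeholder, and the `cdn.example.com` host are all hypothetical and do not represent EdgeOne components or APIs.

```javascript
// In-memory stand-ins for the task queue and cloud storage (hypothetical).
const taskQueue = [];
const storage = new Map();
let nextId = 1;

// Client request and task scheduling: assign a task ID and enqueue the task.
function submitTask(prompt) {
  const taskId = `task-${nextId++}`;
  taskQueue.push({ taskId, prompt });
  return taskId;
}

// Image generation: placeholder for the inference cluster, returns fake bytes.
function runInference(prompt) {
  return new TextEncoder().encode(`image-for:${prompt}`);
}

// Storage, post-processing, and distribution: process one queued task and
// return the URLs the client would use to fetch the results from the CDN.
function processNextTask() {
  const task = taskQueue.shift();
  if (!task) return null; // nothing waiting in the queue
  const image = runInference(task.prompt);
  storage.set(`${task.taskId}/full`, image);
  storage.set(`${task.taskId}/preview`, image.slice(0, 8)); // stand-in for a thumbnail
  const base = `https://cdn.example.com/${task.taskId}`;
  return { taskId: task.taskId, url: `${base}/full`, previewUrl: `${base}/preview` };
}

const submittedId = submitTask("a lighthouse at dawn");
console.log(submittedId, processNextTask());
```

Decoupling submission from processing through a queue is what lets the inference cluster work at its own pace while the client simply polls or waits for the result URL.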

Edge AI Development Status

According to statistics from MarketsandMarkets, the edge computing market size is expected to grow from $60 billion in 2024 to $110.6 billion by 2029, with a Compound Annual Growth Rate (CAGR) of 13.0% during the forecast period. The strongest driving force for this growth comes from edge AI.
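As a quick sanity check, the growth rate can be recomputed from the two market-size figures quoted above using the standard CAGR formula, (end / start)^(1 / years) - 1. The helper name `cagr` is ours, introduced only for this check.

```javascript
// Compound annual growth rate between a start and end value over N years.
function cagr(startValue, endValue, years) {
  return Math.pow(endValue / startValue, 1 / years) - 1;
}

// Figures quoted above: $60 billion (2024) to $110.6 billion (2029), 5 years.
const rate = cagr(60, 110.6, 5);
console.log(`${(rate * 100).toFixed(1)}%`); // prints "13.0%"
```

The result agrees with the 13.0% CAGR stated in the forecast.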

Integrating artificial intelligence (AI) and machine learning (ML) algorithms into edge computing devices enables real-time data analytics and decision-making at the network edge. Edge-based AI/ML applications, such as predictive maintenance, anomaly detection, and personalized content delivery, leverage local processing capabilities to analyze data in near real-time, enabling organizations to derive actionable insights and improve operational efficiency.

By deploying AI and ML algorithms closer to where data is generated, organizations can extract valuable insights faster, optimize operations, and enhance user experiences. This integration also reduces the need for extensive data transmission to centralized cloud servers, minimizing latency and bandwidth requirements while improving edge computing solutions' overall efficiency and responsiveness.

EdgeOne Edge AI Solutions

Edge AI faces several challenges, such as the limitations of computing resources on terminal devices, power consumption management, and the need for model optimization and compression. EdgeOne's edge AI solution organically combines EdgeOne Edge Functions with edge AI to provide users with powerful edge data generation and processing capabilities. The design of EdgeOne's edge AI solution aims to simplify the deployment and invocation process of AI models, enabling users to easily implement advanced AI models on edge devices, thereby optimizing operational efficiency and cost-effectiveness.

EdgeOne Edge AI Architecture

The EdgeOne Edge AI solution builds on and extends the conventional edge AI architecture, constructing a more comprehensive and efficient multi-level architectural system. It fully exploits the advantages of edge computing to provide powerful support for various AI applications.

Serverless Layer:

- Edge Functions Runtime;

- Edge Static Resource Processing Service;

- Edge Storage Components;

Model Layer:

- Model Management, Model Fine-tuning Training, Model Inference Service;

- Model Scheduling, GPU Memory Capacity Management, Computational Resource Utilization Monitoring;

- Model Performance Feedback and Evaluation;

Computing Layer:

- Heterogeneous Computing Resource Management and Invocation;

Edge Functions With AI

Edge AI will be exposed in the form of AI APIs, giving developers the ability to call AI inference from within Edge Functions code:

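    // Decode a base64 string into an ArrayBuffer.
    // Assumes the runtime provides the standard atob() function.
    function base64ToArrayBuffer(base64) {
      const binary = atob(base64);
      return Uint8Array.from(binary, (ch) => ch.charCodeAt(0)).buffer;
    }

    async function handleEvent(event) {
      // request.json() returns a Promise, so it must be awaited.
      const args = await event.request.json();

      // "@tencent/xxx" is a placeholder model identifier.
      const response = await AI.run("@tencent/xxx", {
        prompt: args.prompt,
        negative_prompt: args.negative_prompt,
        // ... other model parameters
      });

      const result = await response.json();
      const image = base64ToArrayBuffer(result.image);

      // Return the decoded image with the content type reported by the model.
      return new Response(image, {
        headers: {
          "Content-Type": result.content_type,
        },
      });
    }

    addEventListener('fetch', event => {
      event.respondWith(handleEvent(event));
    });

AI Functions Deployment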

EdgeOne's one-click global deployment feature allows developers to easily deploy their Edge Functions code to globally distributed nodes, achieving low-latency, high-availability, and elastic services. This feature not only simplifies the deployment process but also ensures stable operation and efficient service of AI functions globally by adapting to various environments and providing security guarantees.

AI Functions Scheduling

EdgeOne's edge AI technology is not limited to improving data processing speed and reducing latency. It can also maintain stability and reliability under different environments and conditions. Even in situations where computational resources are insufficient, it can ensure business continuity through methods such as elastic scheduling.
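One way to picture elastic scheduling is as a fallback rule: prefer the closest node, but move the request outward when that node lacks the resources to host the model. The sketch below is a generic illustration under that assumption, not EdgeOne's actual scheduler; the node list, field names, and the `scheduleInference` function are all hypothetical.

```javascript
// Hypothetical node descriptors: distance to the user and free GPU memory.
const nodes = [
  { name: "edge-near", latencyMs: 8, freeGpuMemGb: 2 },
  { name: "edge-regional", latencyMs: 25, freeGpuMemGb: 12 },
  { name: "central", latencyMs: 80, freeGpuMemGb: 64 },
];

// Choose the lowest-latency node with enough free GPU memory for the model;
// return null if none can host it so the caller can queue or reject.
function scheduleInference(candidates, requiredGpuMemGb) {
  const fits = candidates.filter((n) => n.freeGpuMemGb >= requiredGpuMemGb);
  if (fits.length === 0) return null;
  return fits.reduce((best, n) => (n.latencyMs < best.latencyMs ? n : best));
}

console.log(scheduleInference(nodes, 1).name); // small model stays on the nearest node
console.log(scheduleInference(nodes, 8).name); // larger model falls back to a regional node
console.log(scheduleInference(nodes, 128)); // nothing fits
```

Under this rule a request degrades gracefully: latency rises when the nearest node is full, but the service keeps responding as long as any node has capacity.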

Contact Us

Edge AI is in a rapid development stage with enormous potential. With the upcoming launch of EdgeOne's edge AI solution, we look forward to exploring with you how edge AI can bring revolutionary changes to your business. If you are interested in EdgeOne's edge AI solution, or would like to learn more about how to optimize your business processes using edge AI, please contact us.

Tencent EdgeOne is an advanced content delivery network (CDN) and edge computing platform developed by Tencent Cloud, which provides Edge Solutions for Acceleration, Security, Serverless, and Video. We have now launched a free trial; please click here for more information.