Mastering Docker: A Comprehensive Guide to Containerization, Build, and Deployment

Docker has changed how applications are developed, deployed, and managed by providing a lightweight, portable, and consistent environment for running software. It packages applications into containers that run the same way from development to production, which addresses the notorious "it works on my machine" problem. At the heart of Docker's functionality is the build process, which transforms a Dockerfile into a Docker image: the blueprint for containers that can run anywhere Docker is installed.

This article aims to provide a comprehensive introduction to Docker, covering its core concepts, key features, the essential Docker build process, practical use cases, and advanced techniques. Whether you're a developer looking to streamline your workflow or an IT professional seeking to optimize application deployment, this guide will equip you with the knowledge to harness the full potential of Docker and create efficient, lightweight, and secure containerized applications.

What is Docker?

Docker is an open-source platform that automates the deployment, scaling, and management of applications using containerization technology. Launched in 2013, it changed how software is built, shipped, and run by making containers accessible and practical for everyday development and operations.

At its core, Docker is built around the concept of containers. A container is a lightweight, standalone, executable package that includes everything needed to run a piece of software: code, runtime, system tools, libraries, and settings. Containers are isolated from one another and the host system, ensuring that applications run consistently regardless of the environment.

Key Components of Docker

Docker's architecture consists of several key components:

- Docker Engine: The runtime that builds and runs containers using Docker commands.

- Docker Images: Read-only templates that contain a set of instructions for creating containers. Images are built in layers, making them efficient to store, transfer, and update.

- Dockerfiles: Text files containing instructions to build Docker images automatically. They specify base images, file operations, environment configuration, and commands to run.

- Docker Containers: Running instances of Docker images. Containers encapsulate an application and its dependencies, executing in isolation.

- Docker Registry: A repository for storing and distributing Docker images. Docker Hub is the default public registry, but private registries are also common.

- Docker Compose: A tool for defining and running multi-container Docker applications using a YAML file to configure application services.

Docker vs. Virtual Machines

Unlike traditional virtual machines (VMs), Docker containers don't require a full operating system for each instance. Instead, containers share the host system's OS kernel while maintaining isolation. This approach provides several advantages:

- Efficiency: Containers use fewer resources than VMs, starting in seconds rather than minutes.

- Portability: Containers run consistently across any environment that supports Docker.

- Density: A single machine can run many more containers than VMs.

- Speed: Docker's lightweight nature enables rapid development, testing, and deployment cycles.

Benefits and Use Cases

Docker has transformed modern software development with benefits including:

- Consistency: "It works on my machine" issues are eliminated as development environments match production.

- Microservices Architecture: Docker facilitates breaking applications into smaller, independent services.

- CI/CD Integration: Containers streamline continuous integration and delivery pipelines.

- Scalability: Container orchestration platforms like Kubernetes can automatically scale containerized applications.

- Isolation: Applications run in their own environments without interfering with one another.

- Version Control: Container images can be versioned, allowing for easy rollbacks and updates.

Docker is widely used across industries for application deployment, development environments, microservices architecture, data processing, and cloud-native application development.

Docker Ecosystem

The Docker ecosystem includes numerous tools that enhance its capabilities:

- Kubernetes: Container orchestration platform for automating deployment and scaling

- Docker Swarm: Docker's native clustering and scheduling tool

- Docker Desktop: Development environment for building and testing containerized applications

- Harbor: Enterprise registry service for storing and distributing Docker images

By abstracting away infrastructure differences and packaging applications into portable units, Docker has become a fundamental technology in modern software development and deployment pipelines.

How Docker Works

1. Container Fundamentals

At its core, Docker leverages several Linux kernel features to create isolated environments called containers. Understanding these underlying technologies reveals how Docker achieves lightweight virtualization:

Linux Namespaces

Docker uses Linux namespaces to provide isolation for various system resources. Each container gets its own set of namespaces, creating a layer of isolation:

- PID namespace: Isolates process IDs, so processes in one container cannot see or affect processes in other containers or the host

- Network namespace: Provides isolated network stacks, including interfaces, routing tables, and firewall rules

- Mount namespace: Gives containers their own view of the filesystem hierarchy

- UTS namespace: Allows containers to have their own hostname and domain name

- IPC namespace: Isolates inter-process communication mechanisms

- User namespace: Maps user and group IDs between container and host, enhancing security (supported by Docker, but not enabled by default)
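The namespaces a process belongs to are visible on Linux under /proc. The following is a minimal sketch, assuming a Linux host with /proc mounted; the function name read_namespaces is made up for this illustration. Run once on the host and once inside a container, it should print different identifiers for the namespaces the container does not share with the host.

```python
import os

# Namespace kinds from the list above, as they are named under /proc/<pid>/ns.
NAMESPACE_KINDS = ["pid", "net", "mnt", "uts", "ipc", "user"]


def read_namespaces(pid="self"):
    """Return {kind: identifier} for one process, or {} where /proc is unavailable."""
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    for kind in NAMESPACE_KINDS:
        try:
            # Each entry is a symlink whose target looks like 'pid:[4026531836]'.
            result[kind] = os.readlink(os.path.join(ns_dir, kind))
        except OSError:
            # Not Linux, no permission, or this namespace type is not supported.
            continue
    return result


if __name__ == "__main__":
    for kind, identifier in read_namespaces().items():
        print(f"{kind:5} {identifier}")
```

Two processes that print the same identifier for a kind share that namespace; different identifiers mean they are isolated from each other for that resource.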

Control Groups (cgroups)

Docker uses cgroups to limit and account for resource usage:

- Restricts CPU, memory, and disk I/O; network traffic can be tagged so that separate traffic-shaping tools can limit bandwidth

- Prevents containers from consuming excessive resources

- Enables resource allocation prioritization

- Provides monitoring capabilities for container resource usage
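To make the limits concrete, here is a small sketch of how two common docker run options translate into the numbers the kernel enforces. It assumes the default CPU scheduler period of 100000 microseconds and binary size suffixes (k, m, g); the helper names are made up for this illustration, and the real conversion happens inside Docker.

```python
CFS_PERIOD_US = 100_000  # assumed default scheduler period, in microseconds

_UNITS = {"b": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def cpus_to_cfs_quota(cpus):
    """Translate a --cpus value into a (quota, period) pair in microseconds."""
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    return int(round(cpus * CFS_PERIOD_US)), CFS_PERIOD_US


def memory_to_bytes(limit):
    """Translate a --memory value such as '256m' into a byte count."""
    text = limit.strip().lower()
    if text and text[-1] in _UNITS:
        return int(text[:-1]) * _UNITS[text[-1]]
    return int(text)  # a bare number is taken as bytes


if __name__ == "__main__":
    print(cpus_to_cfs_quota(1.5))
    print(memory_to_bytes("256m"))
```

With these assumptions, --cpus 1.5 lets the container use 150000 microseconds of CPU time in every 100000 microsecond period, which is one and a half cores.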

Union File System

Docker containers rely on a layered approach to filesystem management:

- Combines multiple directory trees into a single unified view

- Implements copy-on-write strategy for efficiency

- Common storage drivers include overlay2 (built on OverlayFS), the older AUFS, and Btrfs (a copy-on-write filesystem rather than a union filesystem)

- Enables Docker's lightweight and fast filesystem operations

2. The Container Lifecycle

Container Creation Process

When you run 'docker run image_name', Docker performs these operations:

- Checks for the image locally: If not found, pulls from the configured registry

- Creates a new container instance: Allocates a writable layer on top of the image layers

- Sets up networking: Creates virtual interfaces and connects to specified networks

- Initializes storage: Mounts volumes and configures storage drivers

- Executes the startup command: Runs the command specified in the image's CMD or ENTRYPOINT instruction

Container States

Containers exist in various states throughout their lifecycle:

- Created: Container is created but not started

- Running: Container processes are active

- Paused: Container processes are suspended

- Stopped: Container processes have exited, but the container still exists (Docker reports this state as "exited")

- Deleted: Container is permanently removed
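The states above can be read as a small state machine. The sketch below is a simplified model, not Docker's implementation: the transition table covers only the common commands, and "exit" stands for the main process ending by itself.

```python
# Simplified transition table: (current state, action) -> next state.
TRANSITIONS = {
    ("created", "start"): "running",
    ("running", "pause"): "paused",
    ("paused", "unpause"): "running",
    ("running", "stop"): "stopped",
    ("running", "exit"): "stopped",
    ("stopped", "start"): "running",
    ("created", "rm"): "deleted",
    ("stopped", "rm"): "deleted",
}


def next_state(state, action):
    """Return the state reached by applying one action, or raise ValueError."""
    try:
        return TRANSITIONS[(state, action)]
    except KeyError:
        raise ValueError(f"cannot {action!r} a container that is {state}") from None


def replay(actions, state="created"):
    """Apply a sequence of actions, starting from a freshly created container."""
    for action in actions:
        state = next_state(state, action)
    return state


if __name__ == "__main__":
    print(replay(["start", "pause", "unpause", "stop", "rm"]))
```

In this model a running container cannot be removed directly, which mirrors why docker rm needs the -f flag for a container that is still running.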

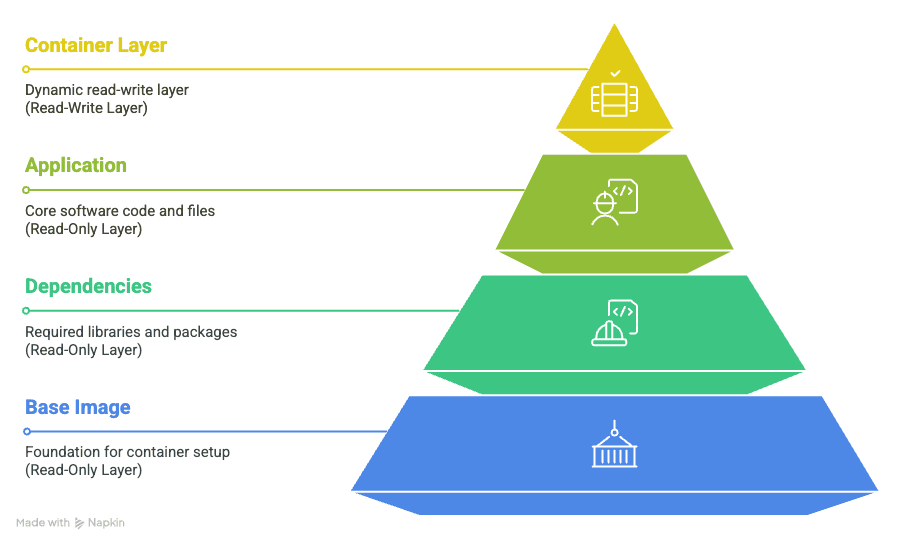

3. The Image Layer System

Layer Architecture

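Docker images consist of a series of read-only layers. Each layer records the filesystem changes made by one Dockerfile instruction, such as RUN, COPY, or ADD; instructions that only set metadata (for example CMD or EXPOSE) do not add filesystem content.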

Copy-on-Write (CoW) Mechanism

When a running container needs to modify a file from a read-only layer:

- The file is copied up to the writable container layer

- Modifications are made to the copy in the container layer

- The original file in the read-only layer remains unchanged

- Other containers using the same image see the original, unmodified file

This mechanism enables:

- Resource efficiency (shared base layers)

- Quick container startup (no need to copy the entire filesystem)

- Storage optimization (unchanged files aren't duplicated)
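The copy-on-write steps above can be sketched with plain dictionaries. This is a toy model under stated assumptions: each layer is a dict from path to file content, and the class name LayeredFS is invented for the illustration. Real storage drivers work on directories and blocks, not in-memory strings.

```python
class LayeredFS:
    """Toy union filesystem: shared read-only image layers plus one writable layer."""

    def __init__(self, image_layers):
        # image_layers is ordered bottom to top and shared between containers.
        self.image_layers = image_layers
        # The writable layer is private to this container.
        self.writable = {}

    def read(self, path):
        # Look in the writable layer first, then in image layers from top to bottom.
        if path in self.writable:
            return self.writable[path]
        for layer in reversed(self.image_layers):
            if path in layer:
                return layer[path]
        raise FileNotFoundError(path)

    def append(self, path, data):
        # Copy-up: the first modification copies the file into the writable layer.
        if path not in self.writable:
            self.writable[path] = self.read(path)
        self.writable[path] += data


if __name__ == "__main__":
    layers = [{"/etc/os-release": "alpine\n"}, {"/app/config": "debug=false\n"}]
    first, second = LayeredFS(layers), LayeredFS(layers)
    first.append("/app/config", "verbose=true\n")
    print(repr(first.read("/app/config")))
    print(repr(second.read("/app/config")))
```

Only the first container sees the appended line. The second container, and the image layer itself, still hold the original content, and nothing was copied for files that were never modified.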

4. Docker Networking

Docker implements several networking modes to support various communication requirements:

Bridge Networking

The default network mode:

- Creates a private network between containers on the same host

- Containers get private IP addresses (typically 172.17.0.0/16 range)

- Port mapping allows exposing container ports to the host

- Containers can communicate using these private IPs

# Run container with port mapping

docker run -p 8080:80 nginx

Host Networking

Removes network isolation between the container and the host:

- Container uses the host's network stack directly

- No need for port mapping

- Potential port conflicts with host services

- Better network performance

# Run container with host networking

docker run --network=host nginx

Overlay Networking

For multi-host container communication:

- Enables containers on different hosts to communicate

- Used primarily in Docker Swarm

- Implements VXLAN encapsulation for network traffic

- Supports container-to-container encryption

Custom Networks

Docker allows creating user-defined networks:

- Provides better isolation

- Enables DNS resolution between containers

- Allows attaching containers to multiple networks

- Supports network driver plugins

# Create a custom network

docker network create --driver bridge my_network

# Run container on custom network

docker run --network=my_network --name my_app my_image

5. Data Persistence

Docker provides several mechanisms for data persistence across container restarts:

Volumes

The preferred method for persistent data:

- Managed by Docker

- Stored in a part of the host filesystem (/var/lib/docker/volumes/)

- Independent of the container lifecycle

- Can be shared between containers

- Support for volume drivers (local, NFS, cloud storage)

# Create volume

docker volume create my_data

# Use volume with container

docker run -v my_data:/app/data my_image

Bind Mounts

Map host directories directly into containers:

- Relies on the host filesystem structure

- Good for development workflows

- Allows direct access to files from the host and the container

- Potential security implications

# Use bind mount

docker run -v /host/path:/container/path my_image

tmpfs Mounts

Store data in the host system memory:

- Never written to the host filesystem

- Good for sensitive information

- Data is lost when the container stops

- Reduces disk I/O for ephemeral data

# Use tmpfs mount

docker run --tmpfs /app/temp my_image

6. Container Communication

Inter-container Communication

Containers can communicate through:

- Docker networks: Using container names as DNS hostnames

- Shared volumes: Reading/writing to common storage

- Environment variables: Passing configuration between containers

- Docker Compose: Defining multi-container applications

External Communication

Containers communicate with the outside world via:

- Port mapping: Exposing container ports to the host

- Host networking: Direct access to host network interfaces

- Gateway connectivity: Using the docker0 bridge as the default gateway
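Port mapping values such as 8080:80 follow the pattern [ip:][hostPort:]containerPort[/protocol]. The parser below is a minimal sketch of how such a value breaks down. It assumes single ports and IPv4 addresses only (no port ranges, no bracketed IPv6), and the names PortMapping and parse_publish are made up for this illustration.

```python
from typing import NamedTuple, Optional


class PortMapping(NamedTuple):
    host_ip: Optional[str]    # None means all host interfaces
    host_port: Optional[int]  # None means Docker picks a free host port
    container_port: int
    protocol: str


def parse_publish(spec: str) -> PortMapping:
    """Parse a -p value of the form [ip:][hostPort:]containerPort[/protocol]."""
    ports, _, protocol = spec.partition("/")
    parts = ports.split(":")
    if len(parts) == 1:
        host_ip, host_port, container_port = "", "", parts[0]
    elif len(parts) == 2:
        host_ip = ""
        host_port, container_port = parts
    elif len(parts) == 3:
        host_ip, host_port, container_port = parts
    else:
        raise ValueError(f"unsupported publish value: {spec!r}")
    return PortMapping(
        host_ip or None,
        int(host_port) if host_port else None,
        int(container_port),
        protocol or "tcp",
    )


if __name__ == "__main__":
    print(parse_publish("8080:80"))
    print(parse_publish("127.0.0.1:8080:80/udp"))
```

Binding to 127.0.0.1 keeps a published port reachable only from the host itself, which is a common choice for development databases.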

Understanding these fundamental mechanisms provides insight into how Docker creates isolated, portable environments that are both lightweight and powerful. This architecture enables Docker's core benefits: consistency across environments, efficient resource utilization, and rapid application deployment.

How to Use Docker

Docker provides a powerful way to containerize applications, making them portable and consistent across different environments. This guide walks you through the essential steps to get started with Docker, from installation to creating your own custom images and managing multi-container applications.

1. Installing Docker

For Windows

- Download Docker Desktop for Windows

- Run the installer and follow the instructions

- Start Docker Desktop from the Windows Start menu

For macOS

- Download Docker Desktop for Mac

- Drag Docker to your Applications folder

- Launch Docker from your Applications

For Linux (Ubuntu)

# Update package index

sudo apt-get update

# Install prerequisites

sudo apt-get install \
    apt-transport-https \
    ca-certificates \
    curl \
    gnupg \
    lsb-release

# Add Docker's official GPG key

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

# Set up stable repository

echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

# Install Docker Engine

sudo apt-get update

sudo apt-get install docker-ce docker-ce-cli containerd.io

# Add your user to the docker group (to run Docker without sudo)

sudo usermod -aG docker $USER

Log out and back in for the group change to take effect, then verify the installation by running:

docker --version

docker run hello-world

2. Understanding Basic Docker Commands

Container Management

# Run a container

docker run nginx

# Run in detached mode (background)

docker run -d nginx

# Run with a name

docker run --name my-nginx -d nginx

# Map ports (host:container)

docker run -d -p 8080:80 nginx

# List running containers

docker ps

# List all containers (including stopped)

docker ps -a

# Stop a container

docker stop container_id_or_name

# Start a stopped container

docker start container_id_or_name

# Remove a container

docker rm container_id_or_name

# Force remove a running container

docker rm -f container_id_or_name

# View container logs

docker logs container_id_or_name

# Execute command inside a running container

docker exec -it container_id_or_name bash

Image Management

# List available images

docker images

# Pull an image from Docker Hub

docker pull ubuntu:20.04

# Remove an image

docker rmi image_id_or_name

# Search Docker Hub for images

docker search mysql

3. Working with Docker Images

Running Containers from Images

# Run a specific version of an image

docker run ubuntu:20.04

# Run interactively

docker run -it ubuntu:20.04 bash

# Run with environment variables

docker run -e MYSQL_ROOT_PASSWORD=my-secret-pw -d mysql:8

# Run with volume mounting

docker run -v /host/path:/container/path -d nginx

Managing Docker Volumes

# Create a named volume

docker volume create my_data

# List volumes

docker volume ls

# Use a named volume with a container

docker run -v my_data:/app/data -d my_app

# Inspect a volume

docker volume inspect my_data

# Remove unused volumes

docker volume prune

4. Building Custom Docker Images

Creating a Dockerfile

Create a file named Dockerfile (no extension):

# Start from a base image

FROM node:14-alpine

# Set the working directory

WORKDIR /app

# Copy package.json and install dependencies

COPY package*.json ./

RUN npm install

# Copy application code

COPY . .

# Expose a port

EXPOSE 3000

# Define the command to run

CMD ["node", "index.js"]

Building the Image

# Build an image from a Dockerfile in current directory

docker build -t my-app:1.0 .

# Build with a specific Dockerfile

docker build -f Dockerfile.dev -t my-app:dev .

# Build with build arguments

docker build --build-arg NODE_ENV=production -t my-app:prod .

Optimizing Docker Builds

Use .dockerignore: Create a .dockerignore file to exclude unnecessary files:

node_modules

npm-debug.log

.git

.env

Layer Optimization: Order Dockerfile instructions to maximize cache utilization:

- Put instructions that change less frequently at the top

- Group related commands to reduce layers
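Why instruction order matters can be shown with a toy model of the build cache. The sketch assumes that a layer's cache key depends on the instruction text, the content of any copied files, and the key of the layer before it. The bracketed [files:...] markers are a made-up stand-in for the file checksums a real builder computes, and the function names are hypothetical.

```python
import hashlib


def layer_keys(instructions):
    """Return one cache key per instruction; each key also covers all earlier ones."""
    keys, parent = [], ""
    for instruction in instructions:
        parent = hashlib.sha256((parent + "\n" + instruction).encode()).hexdigest()
        keys.append(parent)
    return keys


def reused_layers(old_build, new_build):
    """Count the leading instructions whose cached layers can be reused."""
    count = 0
    for old_key, new_key in zip(layer_keys(old_build), layer_keys(new_build)):
        if old_key != new_key:
            break
        count += 1
    return count


def deps_first(src):
    # Dependencies are installed before the application code is copied.
    return ["FROM node:14-alpine", "WORKDIR /app",
            "COPY package*.json ./ [files:deps-v1]", "RUN npm install",
            f"COPY . . [files:{src}]"]


def copy_first(src):
    # Everything is copied before dependencies are installed.
    return ["FROM node:14-alpine", "WORKDIR /app",
            f"COPY . . [files:{src}]", "RUN npm install"]


if __name__ == "__main__":
    print(reused_layers(deps_first("src-v1"), deps_first("src-v2")))
    print(reused_layers(copy_first("src-v1"), copy_first("src-v2")))
```

After a source-only change, the first ordering keeps the npm install layer cached, while the second ordering has to run npm install again because the changed COPY comes before it.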

Multi-stage Builds: Reduce final image size by using multiple stages:

# Build stage

FROM node:14 AS builder

WORKDIR /app

COPY package*.json ./

RUN npm install

COPY . .

RUN npm run build

# Production stage

FROM nginx:alpine

COPY --from=builder /app/build /usr/share/nginx/html

EXPOSE 80

CMD ["nginx", "-g", "daemon off;"]

5. Docker Compose for Multi-Container Applications

Creating a docker-compose.yml File

version: '3'

services:
  web:
    build: ./web
    ports:
      - "8000:8000"
    depends_on:
      - db
    environment:
      DATABASE_URL: postgres://postgres:example@db:5432/mydb
    volumes:
      - ./web:/code

  db:
    image: postgres:13
    environment:
      POSTGRES_PASSWORD: example
      POSTGRES_DB: mydb
    volumes:
      - postgres_data:/var/lib/postgresql/data

volumes:
  postgres_data:

Using Docker Compose

# Start services (Compose v2 is invoked as "docker compose"; the subcommands are the same)

docker-compose up

# Start in detached mode

docker-compose up -d

# Stop services

docker-compose down

# Stop and remove volumes

docker-compose down -v

# View logs

docker-compose logs

# Execute command in a service

docker-compose exec web bash

# Build or rebuild services

docker-compose build

6. Docker Networking

# List networks

docker network ls

# Create a network

docker network create my-network

# Run a container on a specific network

docker run --network=my-network --name myapp -d my-image

# Connect a running container to a network

docker network connect my-network container_name

# Inspect network

docker network inspect my-network

7. Debugging Docker Containers

# Inspect container details

docker inspect container_id

# View container stats (CPU, memory)

docker stats container_id

# Get a shell inside a running container

docker exec -it container_id sh

# Copy files from/to containers

docker cp container_id:/path/to/file ./local/path

docker cp ./local/file container_id:/path/in/container

# View container logs

docker logs --tail 100 -f container_id

8. Best Practices for Using Docker

Security

- Use official or verified images

- Keep images updated with security patches

- Don't run containers as root

- Scan images for vulnerabilities, for example with docker scout cves (the older docker scan command has been retired)

- Never store secrets in your images

Performance

- Use multi-stage builds to create smaller images

- Only install the necessary packages

- Remove package manager caches

- Use Alpine-based images when possible

Development Workflow

- Use Docker Compose for local development

- Mount source code as volumes for development

- Use different Dockerfiles for development and production

- Implement CI/CD pipelines for Docker builds

Container Management

- Use meaningful container names and image tags

- Set resource limits for containers

- Implement health checks

- Use container orchestration for production (Kubernetes, Docker Swarm)

- Regularly clean up unused containers, images, and volumes:

docker system prune -a

By following this guide, you'll be able to use Docker effectively for developing, testing, and deploying applications in consistent environments. As you become more comfortable with these basics, you can explore more advanced features like Docker Swarm, Docker secrets, and integrating Docker with CI/CD pipelines.
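Several of the practices above (meaningful names, resource limits, a non-root user, health checks) come down to a handful of docker run options. The helper below is a hedged sketch that only assembles the command line and does not start anything. The function name, the default limits, and the 1000:1000 user are assumptions chosen for illustration; suitable values depend on the application.

```python
import shlex


def hardened_run_args(image, name, memory="256m", cpus="0.5",
                      user="1000:1000", health_cmd=None):
    """Build a docker run command line that applies basic limits and a non-root user."""
    args = [
        "docker", "run", "-d",
        "--name", name,      # meaningful container name
        "--memory", memory,  # memory limit
        "--cpus", cpus,      # CPU limit
        "--user", user,      # run as a non-root UID:GID
    ]
    if health_cmd:
        args += ["--health-cmd", health_cmd]  # command Docker runs to check health
    args.append(image)
    return args


if __name__ == "__main__":
    # Print the command instead of executing it, so it can be reviewed first.
    print(shlex.join(hardened_run_args("my-app:1.0", "my-app")))
```

Returning a list of arguments rather than a single string means the result can be passed to subprocess.run without shell quoting problems.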

Practical Use Cases of Docker

1. Development Environments

Creating Consistent Development Setups

Docker allows developers to create standardized development environments that match production setups. By defining dependencies and configurations in a Dockerfile, teams can ensure that everyone is working with the same environment, reducing setup time and minimizing compatibility issues.

Simplifying Dependency Management

Managing dependencies can be a complex and error-prone process. Docker simplifies this by encapsulating all dependencies within a container, ensuring that the application always runs with the correct versions of libraries and frameworks.

2. Deployment and Production

Container Orchestration with Kubernetes

Kubernetes is a popular container orchestration platform that works seamlessly with Docker. It allows you to manage large-scale deployments of containers, providing features like auto-scaling, load balancing, and self-healing. Docker's integration with Kubernetes makes it easy to deploy and manage containerized applications at scale.

Continuous Integration and Delivery (CI/CD)

Docker is a key component of modern CI/CD pipelines. By containerizing applications, you can automate the build, test, and deployment processes, ensuring that changes are quickly and reliably rolled out to production.

3. Microservices Architecture

Benefits of Using Docker for Microservices

Microservices architecture involves breaking down applications into smaller, independent services that communicate over a network. Docker is well-suited for this approach, as it allows each microservice to be packaged and deployed as a separate container. This modularity improves scalability, maintainability, and resilience.

Example of a Microservices Deployment

Imagine a web application composed of multiple microservices, such as a user service, authentication service, and payment service. Each service can be containerized using Docker, with Docker Compose defining the relationships between them. This setup allows for independent scaling and updates, making the application more robust and easier to manage.
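The relationships between such services form a dependency graph, which is what a Compose depends_on section expresses. The sketch below derives a start order from that graph. The specific dependencies between the user, authentication, and payment services are assumptions made up for this illustration.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical dependencies: each service maps to the services it needs first.
DEPENDS_ON = {
    "user": set(),
    "auth": {"user"},
    "payment": {"auth", "user"},
}


def start_order(depends_on):
    """Return the services in an order where dependencies always start first."""
    return list(TopologicalSorter(depends_on).static_order())


if __name__ == "__main__":
    print(start_order(DEPENDS_ON))
    try:
        start_order({"a": {"b"}, "b": {"a"}})
    except CycleError:
        print("circular dependency")
```

An ordering like this controls only when containers are started. It does not guarantee that a dependency is ready to accept connections, so services should still retry or rely on health checks.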

4. Edge Computing and IoT

Running Containers on Resource-Constrained Devices

Docker's lightweight nature makes it ideal for edge computing and IoT applications. Containers can be deployed on resource-constrained devices, such as Raspberry Pis or embedded systems, enabling the execution of complex applications in a small footprint.

Use Cases in IoT Ecosystems

In IoT ecosystems, Docker can be used to manage and deploy software updates to devices, ensuring that they run the latest versions of applications. This approach simplifies device management and reduces the risk of downtime.

Docker Ecosystem and Community

Docker Hub and Container Repositories

1. Finding and Using Official Images

Docker Hub provides a large library of official images for popular applications and operating systems. These images are curated by Docker together with upstream maintainers and are regularly updated with security fixes. To use an official image, pull it from Docker Hub with the docker pull command.

2. Publishing Your Own Images

You can also publish your own custom images to Docker Hub. To do this, first create an account on Docker Hub and then tag your image with your Docker Hub username and repository name. For example:

docker tag my_image username/repository:tag

docker push username/repository:tag

This process makes your image available to others in the Docker community.

3. Managing Private Repositories

Docker Hub also supports private repositories, allowing you to store and share images securely within your organization. Private repositories are useful for protecting sensitive or proprietary code.

Integration with Other Tools

1. CI/CD Tools (Jenkins, GitLab CI)

Docker integrates seamlessly with CI/CD tools like Jenkins and GitLab CI, enabling automated build, test, and deployment pipelines. By containerizing your application, you can ensure that the same environment is used throughout the CI/CD process, reducing the risk of deployment issues.

2. Cloud Platforms (AWS, Azure, Google Cloud)

All major cloud platforms provide support for Docker, allowing you to deploy and manage containerized applications in the cloud. For example, AWS offers Amazon Elastic Container Service (ECS) and Amazon Elastic Kubernetes Service (EKS), while Azure provides Azure Kubernetes Service (AKS), and Google Cloud offers Google Kubernetes Engine (GKE). These services provide scalable, managed environments for running Docker containers.

3. Monitoring and Logging Tools (Prometheus, ELK Stack)

Monitoring and logging are critical for maintaining the health and performance of containerized applications. Docker integrates with popular monitoring tools like Prometheus and logging solutions like the ELK Stack (Elasticsearch, Logstash, Kibana), providing comprehensive visibility into your application's behavior.

Docker Community Resources

1. Forums and Support

The Docker community is active and supportive, with forums like Docker Community Forums and Stack Overflow providing a wealth of information and assistance. If you encounter issues or have questions, these platforms are excellent places to seek help.

2. Contributing to Docker Projects

Docker is an open-source project, and contributions from the community are always welcome. You can contribute to Docker by submitting bug reports, feature requests, or even code changes. Participating in the Docker community is a great way to stay up-to-date with the latest developments and enhance your skills.

3. Docker Meetups and Conferences

Docker hosts regular meetups and conferences around the world, providing opportunities to network with other Docker users, learn from experts, and stay informed about the latest trends in containerization. Attending these events can be a valuable way to deepen your understanding of Docker and its ecosystem.

Conclusion

Docker has revolutionized the way applications are developed, deployed, and managed, offering unparalleled flexibility, portability, and scalability. By leveraging Docker's powerful features and techniques, developers and IT professionals can streamline their workflows, ensure consistency across environments, and confidently deploy applications. As containerization continues to gain traction in the industry, mastering Docker is essential for anyone involved in modern software development. Whether you are just starting your journey with Docker or looking to expand your knowledge, the resources and community support available make it easier to unlock this transformative technology's full potential.