Introducing Open Edge on EdgeOne: A Comprehensive Guide to the Open Edge Serverless Development Platform and AI Gateway Features

What is Open Edge?

To encourage more developers to participate in, collaborate on, and improve edge applications, Tencent EdgeOne has created Open Edge, an open technology co-creation platform for developers. Open Edge further opens up EdgeOne's edge node capabilities across the globe and offers an out-of-the-box experience with a range of applications, so that developers can jointly explore and build the next generation of edge Serverless applications.

Open Edge Architecture Overview

The Open Edge architecture is designed to provide a seamless experience for developers working on edge computing applications. It consists of three main layers: the Serverless Application Layer, the Edge Component Layer, and the Edge Computing Layer. Each layer plays a distinct role in the efficient implementation and operation of edge applications.

1. Serverless Application Layer

Focusing on lightweight applications, the Serverless Application Layer provides a maintenance-free and out-of-the-box experience for developers. Currently, we have made the AI Gateway application available for free to help developers manage and control access to large language models (LLMs). Additional applications, including Poster Generation, Real-time Transcoding, and Text-to-Image, are in development and will be available soon.

2. Edge Component Layer

The Edge Component Layer consists of core components that are essential for the implementation of edge applications. These components include Edge Functions, Edge Cache, Edge KV, Edge COS, and Edge AI. These building blocks enable developers to create robust, high-performance applications that can efficiently process and manage data at the edge of the network.

3. Edge Computing Layer

The Edge Computing Layer provides the computing power that edge applications run on. It includes heterogeneous resources such as CPU and GPU, which allow applications to process and analyze data in real time. This layer is what enables edge applications to deliver the low-latency, high-performance results that many use cases in IoT, AI, and real-time analytics depend on.

What is Open Edge Capable of?

The core advantages of Open Edge are:

- Serverless Architecture: Utilizing serverless architecture improves resource utilization efficiency and reduces operational complexity.

- Free Service: Offering basic free application services, reducing the initial cost for developers.

- Out-of-the-box Experience: Users can quickly start using the service without complicated settings.

- Co-creation Platform: Encouraging developers to participate, collaborate, and improve applications together.

- Multiple Application Support: Providing a diverse range of application choices to meet different needs.

By joining Open Edge, you will enjoy the following benefits:

- Free to activate.

- The AI Gateway is currently available for developers to apply for a free trial. It can manage and control access to large language models (LLMs) and supports major model service providers such as OpenAI, Minimax, Moonshot AI, Gemini AI, Tencent Hunyuan, Baidu Qianfan, Alibaba Tongyi Qianwen, and ByteDance DouBao.

We look forward to having you join Open Edge and use technology to change the world!

What is AI Gateway?

1. Feature Overview

Tencent EdgeOne AI Gateway provides security, visibility, and request behavior control for access to large language model (LLM) service providers. Currently, the AI Gateway is available for developers to apply for a free trial.

AI Gateway currently supports cache configuration. Features under development include rate limiting, request retries, LLM model fallback, and virtual keys. Together, these capabilities are intended to improve the security and stability of access to LLM service providers while reducing access costs.

Note: AI Gateway is currently in Beta, and we do not recommend using it in a production environment. If you want to try it for your production business, please carefully consider the business risks first.

2. Applicable Scenarios

- Enterprise Office: Suitable for enterprise managers who set up an AI Gateway between employees and LLM service providers to secure employees' access to those providers and control its cost.

- Personal Development: Suitable for individual AIGC developers who set up an AI Gateway between consumer users and LLM service providers to control the request behavior of those users.

3. Feature Advantages

- Cost Reduction: With caching, repeated prompt requests are answered directly from the cache, eliminating the need to call the LLM service provider again. This avoids paying for duplicate requests and reduces your operational expenses.

- Flexible Configuration: Capabilities such as request retries, rate limiting, and LLM model fallback can be configured to handle various exceptions and complex scenarios, ensuring service availability.

- Data Monitoring: Through the data dashboard, you can obtain detailed statistical information about AI Gateway requests. This data will help you gain insights into traffic patterns, optimize business processes, and make more accurate business decisions.

- High Security: Virtual keys provide an extra layer of security. Because clients use the virtual key rather than your LLM service provider's access key, the provider key is not exposed to them, which helps protect your data security and business privacy.

Note: Not all of the above capabilities are currently available. If you are particularly interested in certain capabilities, please provide feedback to the product team.
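To make the retry and model fallback ideas above concrete, here is a minimal client-side sketch. It is not the AI Gateway's implementation (those features are still under development); the function name, the `send` callback, and the backoff values are all hypothetical and chosen only for illustration.

```python
import time


def call_with_retry_and_fallback(models, send, max_attempts=3,
                                 base_delay=0.5, sleep=time.sleep):
    """Try each model in order, retrying with exponential backoff
    before falling back to the next one.

    `send(model)` is a hypothetical callback that performs the request
    and raises an exception on failure.
    """
    last_error = None
    for model in models:
        for attempt in range(max_attempts):
            try:
                return model, send(model)
            except Exception as error:  # real code should catch narrower errors
                last_error = error
                # Back off before the next attempt on the same model.
                if attempt < max_attempts - 1:
                    sleep(base_delay * (2 ** attempt))
    raise RuntimeError("all models failed") from last_error
```

The function returns both the model that finally answered and its response, so a caller can log when a fallback was used.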

4. LLM Service Providers

Major model service providers such as OpenAI, Minimax, Moonshot AI, Gemini AI, Tencent Hunyuan, Baidu Qianfan, Alibaba Tongyi Qianwen, and ByteDance DouBao are already supported, with more providers under development.

Operation Guide

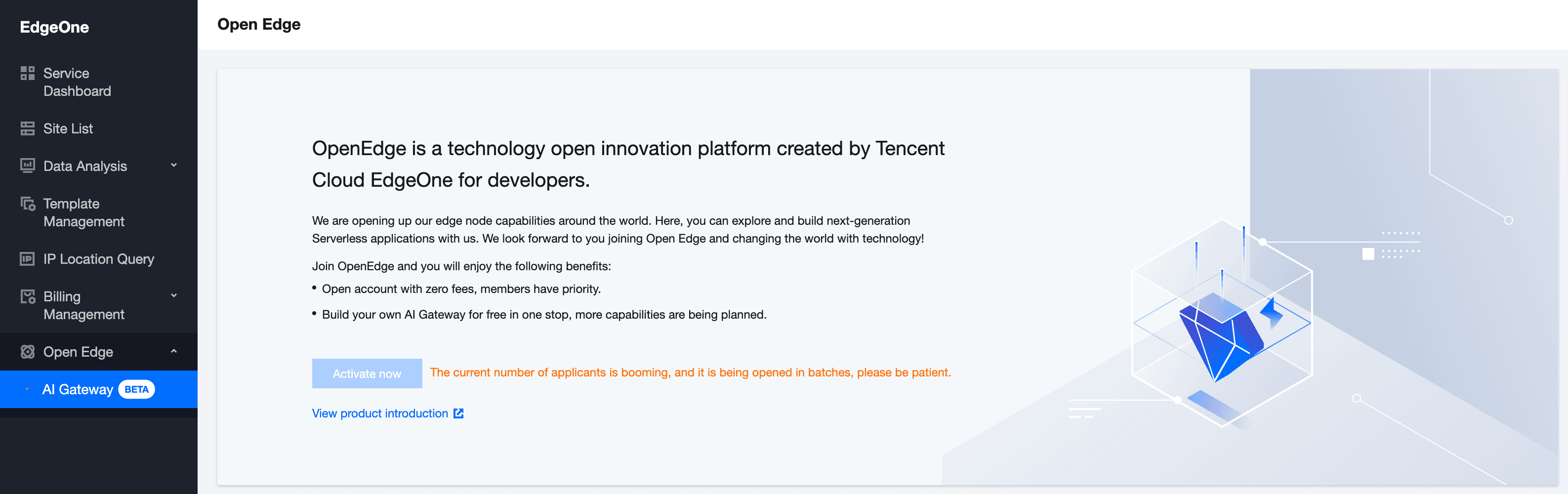

1. Activate Open Edge

To access Open Edge, go to the Tencent EdgeOne Console and click on Open Edge in the left sidebar. If you haven't applied for access before, you will need to request usage permissions by clicking on "Activate Now".

Note: As we are currently conducting limited user testing in batches, if your application is not approved at this time, please be patient and wait for further updates. We appreciate your understanding.

2. Create an AI Gateway

After successful activation, go to the AI Gateway list page and click "Create." Follow the pop-up prompts to enter a name and description.

- Name: Required field, cannot be modified after creation, and can only contain numbers, upper and lower case letters, hyphens, and underscores; duplicate names are not allowed.

- Description: Optional field, with a maximum length of 60 characters.
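The field rules above can be checked before submitting the form. The following is a minimal sketch based only on the rules as stated here; the function name is hypothetical, and it assumes that duplicate detection is an exact, case-sensitive match, which the console may or may not do.

```python
import re

# Allowed characters for the name, per the rules above:
# digits, uppercase and lowercase letters, hyphens, and underscores.
NAME_PATTERN = re.compile(r"[A-Za-z0-9_-]+")
MAX_DESCRIPTION_LENGTH = 60


def validate_gateway_fields(name, description="", existing_names=()):
    """Return a list of problems; an empty list means the input looks valid."""
    problems = []
    if not name:
        problems.append("name is required")
    elif not NAME_PATTERN.fullmatch(name):
        problems.append("name contains characters that are not allowed")
    elif name in existing_names:  # assumption: exact, case-sensitive comparison
        problems.append("name is already in use")
    if len(description) > MAX_DESCRIPTION_LENGTH:
        problems.append("description exceeds 60 characters")
    return problems
```

For example, `validate_gateway_fields("my-gateway_01")` returns an empty list, while a name containing spaces is reported as invalid.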

3. Configure AI Gateway

After successfully creating the AI Gateway, go to the AI Gateway list page and click on "Details" or the specific AI Gateway instance ID to enter the gateway's details page. Currently, cache configuration is supported.

- Enable/Disable: Turn on the switch to enable caching, which lets the gateway answer identical prompt requests directly from its cache without calling the LLM service provider. Turn off the switch to disable caching, so that every request is handled by the LLM service provider.

- Set Cache Duration: Supported cache durations include 2 minutes, 5 minutes, 1 hour, 1 day, 1 week, and 1 month. After the set duration, the cache will be automatically cleared.
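The cache behavior described above can be pictured as a time-limited lookup keyed by the request. The sketch below is an in-memory illustration only, not the gateway's actual implementation; the class name, the hashing of the request body as the cache key, and the reading of "1 month" as 30 days are all assumptions.

```python
import hashlib
import json
import time

# Cache durations offered in the console, in seconds.
# Assumption: "1 month" is treated as 30 days.
CACHE_DURATIONS = {
    "2 minutes": 2 * 60,
    "5 minutes": 5 * 60,
    "1 hour": 60 * 60,
    "1 day": 24 * 60 * 60,
    "1 week": 7 * 24 * 60 * 60,
    "1 month": 30 * 24 * 60 * 60,
}


class PromptCache:
    """Hypothetical time-limited cache for identical prompt requests."""

    def __init__(self, ttl_seconds, enabled=True, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.enabled = enabled
        self._clock = clock
        self._entries = {}  # key -> (expires_at, response)

    @staticmethod
    def _key(request_body):
        # Identical request bodies map to the same key.
        canonical = json.dumps(request_body, sort_keys=True)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def fetch(self, request_body, call_provider):
        """Return (response, from_cache)."""
        if not self.enabled:
            return call_provider(request_body), False
        key = self._key(request_body)
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1], True
        # Missing or expired: call the provider and store the fresh response.
        response = call_provider(request_body)
        self._entries[key] = (now + self.ttl_seconds, response)
        return response, False
```

With caching enabled, the second identical request within the configured duration is served without calling `call_provider`; once the duration has passed, the provider is called again.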

4. API Endpoint

The backend behind the AI Gateway endpoint is the large language model (LLM) service provider. Currently, major model service providers such as OpenAI, Minimax, Moonshot AI, and Gemini AI are supported.

Refer to the example for the service provider's endpoint, such as OpenAI's, and replace the xxxxxxxxxxxxxxxxxxxxxxxx after Bearer with your access key for that service provider.
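As a rough illustration of where the access key goes, the sketch below builds (but does not send) a request with an `Authorization: Bearer` header. The endpoint URL is a hypothetical placeholder, the function name is invented for this example, and the JSON body follows the OpenAI chat completions format; the real gateway may require a different URL and additional headers, so treat the official example as authoritative.

```python
import json
import urllib.request

# Hypothetical placeholder: replace with the endpoint from the official example.
GATEWAY_ENDPOINT = "https://ai-gateway.example.invalid/v1/chat/completions"


def build_chat_request(provider_key, prompt, model, endpoint=GATEWAY_ENDPOINT):
    """Build a POST request that carries the provider access key as a Bearer token."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        endpoint,
        data=json.dumps(body).encode("utf-8"),
        headers={
            # The provider access key replaces the xxxxxxxx placeholder.
            "Authorization": "Bearer " + provider_key,
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

The returned object can be passed to `urllib.request.urlopen` once the placeholder endpoint and key have been replaced with real values.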

Case Demonstration

Accessing OpenAI through AI Gateway

Operational scenario: First, disable the cache in AI Gateway and access OpenAI through the gateway.

Then enable the cache in AI Gateway and send the same request again.

If the OE-Cache-Status response header is HIT, the response was served from the cache.
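A client can check this header programmatically. The helper below is a small sketch: the function name is hypothetical, and it assumes only what is stated above, namely that a value of HIT in OE-Cache-Status means a cache hit. Comparing the value case-insensitively is a defensive choice rather than documented behavior.

```python
def is_cache_hit(response_headers):
    """Return True when the OE-Cache-Status response header equals HIT.

    `response_headers` is any mapping with an items() method, such as a
    dict or the headers object of an HTTP response.
    """
    for name, value in response_headers.items():
        # HTTP header names are case-insensitive.
        if name.lower() == "oe-cache-status":
            return value.strip().upper() == "HIT"
    return False
```

Any other value, or a missing header, is treated as "not served from the cache".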

To access other LLM service providers through the AI Gateway, you can follow the same steps as described above.

FAQ

1. Is AI Gateway free?

Yes, the AI Gateway is now open for developers to apply for a free trial.

2. What can AI Gateway do?

The AI Gateway enables management and control of access to large language models (LLMs) and currently supports major model service providers such as OpenAI, Minimax, Moonshot AI, and Gemini AI.

3. Can the AI Gateway support more LLM service providers?

If the current LLM service providers do not meet your requirements, please feel free to contact us for an evaluation.