Organizations are modernizing their engineering processes to speed up IT infrastructure development, and rapid deployment to modern platforms is central to that effort. Serverless functions and containers have become the two dominant ways to host these deployments, for several reasons:

The popularity of cloud computing: As cloud computing has matured and spread, more and more enterprises and individuals have migrated their applications and services to the cloud. This shift has in turn driven the emergence and growth of newer cloud technologies such as serverless and containers.

Application complexity: As applications grow more complex, traditional deployment methods can no longer keep up. They require teams to account for hardware, operating systems, network environments, and more, whereas serverless and container technologies let developers deploy and manage applications far more easily.

Elastic scaling needs: As user numbers and data volumes keep growing, applications need elastic scaling to cope with traffic peaks. Serverless and container technologies can automate this scaling to better meet user demand.

In this article, we explore their key differences and offer criteria to help you decide which technology best suits your next project.

What is Serverless Computing?

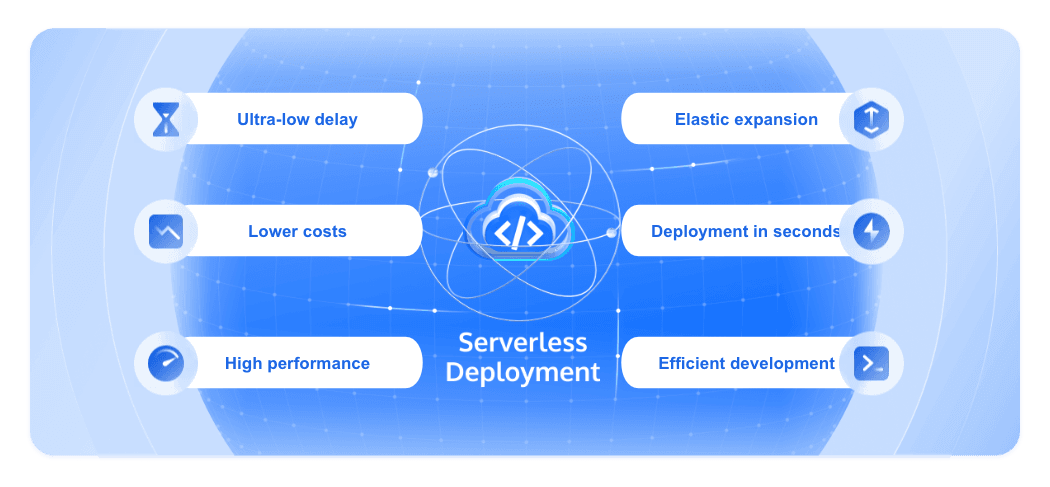

Serverless computing is a cloud execution model in which the cloud provider dynamically allocates machine resources and handles server management, scaling, and capacity planning. Developers can focus solely on writing and deploying their code, while the provider takes care of the operational side.

Serverless computing is most closely associated with Function as a Service (FaaS), in which individual functions or pieces of code run in response to specific events or triggers. These functions are stateless and scale automatically with the number of incoming requests. This lets developers build highly scalable, cost-effective applications, since they pay only for the compute time and resources consumed while their functions run.
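
To make the FaaS model concrete, here is a minimal sketch of a stateless function in Python. The `handler(event, context)` signature mirrors a convention used by several FaaS platforms (AWS Lambda's Python runtime, for example), but the event shape used here (an optional `"name"` field) is a hypothetical assumption, and the loop at the bottom merely simulates a platform invoking the function once per incoming event.

```python
import json


def handler(event, context=None):
    """Hypothetical FaaS entry point: the response depends only on the event."""
    # Read input from the triggering event; nothing is kept between calls.
    name = event.get("name", "world")
    body = {"message": f"Hello, {name}!"}
    # Return an HTTP-style response; `context` is unused in this sketch.
    return {"statusCode": 200, "body": json.dumps(body)}


if __name__ == "__main__":
    # Simulate the platform calling the function once per incoming event.
    for incoming in [{"name": "Alice"}, {}]:
        print(handler(incoming))
```

Because the function holds no state of its own, the platform is free to run as many copies in parallel as the request rate demands.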

Advantages

Automatic scaling: Serverless platforms scale up or down automatically with demand. If your application's traffic increases, the platform allocates more resources to handle it.

Cost-effectiveness: In a serverless model, you pay only for the resources you actually consume, not for pre-allocated capacity. This can significantly reduce costs, especially for workloads that sit idle much of the time.

Reduced operational burden: Serverless computing eliminates the need for server and infrastructure management, allowing developers to focus on writing code and implementing business logic.

Disadvantages

Cold start problem: When a function has not been invoked for a while, the next invocation may take longer because the platform must first initialize a new execution environment. This delay, called a "cold start," can hurt your application's responsiveness.

Vendor lock-in: Because serverless implementations differ between cloud providers, migrating an application from one platform to another can be difficult.

Statelessness: Serverless functions are usually stateless, meaning each invocation is independent of the others. If your application needs to maintain state, you will need an external service such as a database or cache.
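
The following sketch illustrates the external-state pattern: the function itself keeps nothing between invocations and reads and writes a visit counter through a store. `KeyValueStore` is a hypothetical interface and `InMemoryStore` is a local stand-in used only for testing; in a real deployment the store would be backed by a managed database or cache.

```python
from typing import Dict, Protocol


class KeyValueStore(Protocol):
    """Hypothetical interface for an external store (managed database, cache, ...)."""

    def get(self, key: str, default: int = 0) -> int: ...

    def set(self, key: str, value: int) -> None: ...


class InMemoryStore:
    """Local stand-in for tests; production code would talk to a networked service."""

    def __init__(self) -> None:
        self._data: Dict[str, int] = {}

    def get(self, key: str, default: int = 0) -> int:
        return self._data.get(key, default)

    def set(self, key: str, value: int) -> None:
        self._data[key] = value


def count_visit(event: dict, store: KeyValueStore) -> int:
    """Stateless handler: the visit count lives in the store, not in the function."""
    key = f"visits:{event['user_id']}"
    visits = store.get(key) + 1
    store.set(key, visits)
    return visits
```

Note that the read-then-write in `count_visit` is not atomic, so concurrent invocations could lose updates; real stores generally offer an atomic increment or conditional write for exactly this case.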

Application scenarios

Event-driven applications: Serverless computing is ideal for handling event-based applications, such as responding to HTTP requests, processing database updates, or responding to signals from IoT devices.

Microservices: Serverless computing can be used to build microservices architectures. Each service can be implemented as one or more stand-alone functions that are developed, deployed, and scaled independently.

Real-time file processing: Serverless functions can also process files as they arrive. For example, when a user uploads a file to cloud storage, the upload can trigger a function that runs image recognition or video transcoding on it.
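
Here is a sketch of the upload-triggered pattern. The event fields (`bucket`, `key`) and the task names are assumptions, since each provider defines its own storage-event format, and the actual recognition or transcoding call is left as a comment.

```python
import os

# Extensions routed to each kind of processing (illustrative, not exhaustive).
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".gif"}
VIDEO_EXTENSIONS = {".mp4", ".mov", ".mkv"}


def on_file_uploaded(event: dict) -> dict:
    """Hypothetical storage-trigger handler; the event fields are assumptions."""
    key = event["key"]
    ext = os.path.splitext(key)[1].lower()
    if ext in IMAGE_EXTENSIONS:
        task = "image-recognition"
    elif ext in VIDEO_EXTENSIONS:
        task = "video-transcoding"
    else:
        task = "skip"
    # A real function would call a recognition or transcoding service here.
    return {"bucket": event["bucket"], "key": key, "task": task}
```

Each upload triggers its own short-lived invocation, so a burst of uploads is absorbed by running more copies of the function rather than by queuing on a fixed server.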

What are Containers?

Containerization is an operating-system-level virtualization technique that lets you run and manage applications and their dependencies in isolated environments called "containers." The most widely used tools are Docker, for building and running containers, and Kubernetes, for orchestrating them.

Docker is an open-source containerization platform that lets developers package an application and its dependencies into a portable container and then run that container on any machine that supports Docker.

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containers. It can run in a clustered environment and across multiple clouds or hybrid cloud environments.

Advantages

Portability: Because a container bundles the application with all its dependencies, it can run in any Docker-enabled environment, whether that is a developer's PC, a test server, or a cloud environment.

Efficient use of resources: Containers are lighter, start faster, and use resources more efficiently than traditional virtual machines, because they share the host's operating system kernel instead of running a full guest operating system.

Consistency across environments: Containerization ensures that applications behave consistently across environments, which reduces the "works on my machine" problem.

Disadvantages

Management complexity: While containerization can make development and deployment more efficient, managing and maintaining containerized environments, especially in large-scale and distributed environments, can be complex.

Security concerns: Containers share the host's operating system kernel, so a compromised container may give an attacker a path to the host. Container security therefore needs special attention.

Application scenarios

Microservices architecture: Containerization is a good fit for microservices because each microservice can be packaged into a separate container that is developed, deployed, and scaled independently.

Continuous integration/continuous deployment (CI/CD): Containerization simplifies the CI/CD process. Developers can build and test a container image locally or in CI and then promote that same image to production.

Hybrid cloud deployment: Because containers are portable, they run readily across different cloud providers and hybrid cloud environments, which makes them well suited to hybrid cloud deployments.

Comparison between Serverless and Containers

Scalability Comparison

Serverless computing provides automatic scaling: when demand for an application increases, the platform adds resources without any scaling configuration on your part. Kubernetes can also scale containerized workloads automatically, for example with its Horizontal Pod Autoscaler, but this typically requires more configuration and administration.
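
For a sense of what that configuration involves, Kubernetes documents the core rule of its Horizontal Pod Autoscaler as desiredReplicas = ceil[currentReplicas * (currentMetricValue / desiredMetricValue)], with no change made when the ratio is within a tolerance of 1.0 (0.1 by default). The function below is a simplified Python rendering of that rule. It ignores real-world details such as stabilization windows, pod readiness, and multiple metrics, and its default minimum and maximum replica counts are arbitrary choices for illustration.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified HPA-style rule: scale replicas in proportion to metric / target."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the replica count unchanged.
        desired = current_replicas
    else:
        # desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)
        desired = math.ceil(current_replicas * ratio)
    # Respect the configured lower and upper bounds.
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 replicas averaging 90% CPU against a 50% target give ceil(4 * 1.8) = 8 replicas. With containers, you choose the metric, the target, and the bounds; a serverless platform makes these decisions for you.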

Cost Comparison

In a serverless model, you pay only for the resources you actually consume, not for pre-allocated capacity, which can greatly reduce costs. In a containerized environment, you typically pay for instances that run continuously, even when they are not fully utilized.

Comparison of Operational Responsibilities

In a serverless environment, the cloud provider is responsible for managing and maintaining the infrastructure, and developers only need to focus on writing code. In a containerized environment, while container management platforms such as Kubernetes can help manage containers, developers or operations personnel are still responsible for managing and maintaining containers and container orchestration platforms.

Portability Comparison

Containerized applications are highly portable because containers can run in any environment that supports Docker. Serverless computing can suffer from vendor lock-in because different serverless platforms may have different APIs and services.
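
One common way to limit serverless lock-in is to keep business logic free of platform-specific types and confine provider code to thin adapters. In the sketch below, `greet` is the portable core, while both handlers and their event shapes (`query` and `params`) are hypothetical stand-ins for how two different platforms might deliver a request.

```python
def greet(name: str) -> str:
    """Provider-agnostic business logic: no platform types leak in here."""
    return f"Hello, {name}!"


def provider_a_handler(event: dict, context=None) -> dict:
    """Adapter for a hypothetical platform that nests query parameters under 'query'."""
    name = event.get("query", {}).get("name", "world")
    return {"status": 200, "body": greet(name)}


def provider_b_handler(request: dict) -> tuple:
    """Adapter for a second hypothetical platform that passes flat 'params'."""
    name = request.get("params", {}).get("name", "world")
    return greet(name), 200
```

Migrating then means rewriting only the adapters. This does not address lock-in to managed services such as proprietary queues or databases, which usually needs its own abstraction layer.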

Comparison of Application Performance

Serverless computing can be affected by the cold start problem, that is, after a function has not been called for some time, the next call may take longer to start up. Containerized applications, on the other hand, often provide more consistent performance because once the container is started, it can run continuously and respond quickly to requests.

In short, serverless computing and containerization each have their strengths, and the right choice depends on your specific needs and scenario.

How to Choose between Serverless and Containers?

When choosing between serverless and containers, you need to consider the following factors:

Application Requirements

If your application requires a lot of custom configuration or needs to use a specific operating system or software, then containers may be a better choice because they offer more flexibility. If your application is primarily event-driven or requires fast, automatic scaling, serverless may be better.

Cost

Serverless computing is typically billed for the resources actually used, which can make it more economical than containers, especially for applications with erratic traffic or short usage times. For applications that run continuously under steady load, containers may be more economical because you can better control and optimize resource usage.
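
The trade-off can be framed as a break-even calculation: pay-per-use cost grows with request volume, while an always-on container costs roughly the same each month. The sketch below assumes a common pricing shape (a per-request fee plus compute billed in GB-seconds); every price in it is made up for illustration, and it ignores free tiers, data transfer, and the operational effort of running containers.

```python
def serverless_monthly_cost(requests: int, avg_duration_s: float, memory_gb: float,
                            price_per_million_requests: float,
                            price_per_gb_second: float) -> float:
    """Pay-per-use cost: a per-request fee plus compute billed in GB-seconds."""
    request_fee = requests / 1_000_000 * price_per_million_requests
    compute_fee = requests * avg_duration_s * memory_gb * price_per_gb_second
    return request_fee + compute_fee


def break_even_requests(avg_duration_s: float, memory_gb: float,
                        price_per_million_requests: float,
                        price_per_gb_second: float,
                        container_monthly_cost: float) -> float:
    """Monthly request volume at which pay-per-use equals a fixed container bill."""
    cost_per_request = (price_per_million_requests / 1_000_000
                        + avg_duration_s * memory_gb * price_per_gb_second)
    return container_monthly_cost / cost_per_request


if __name__ == "__main__":
    # Illustrative, made-up prices; substitute your provider's actual rates.
    PRICES = dict(price_per_million_requests=0.20, price_per_gb_second=0.00001)
    CONTAINER_COST = 35.0  # hypothetical always-on instance, per month

    for monthly_requests in (1_000_000, 10_000_000, 100_000_000):
        cost = serverless_monthly_cost(monthly_requests, 0.1, 0.5, **PRICES)
        cheaper = "serverless" if cost < CONTAINER_COST else "container"
        print(f"{monthly_requests:>11,} requests: ${cost:,.2f} vs ${CONTAINER_COST:,.2f} ({cheaper})")

    breakeven = break_even_requests(0.1, 0.5, container_monthly_cost=CONTAINER_COST, **PRICES)
    print(f"break-even: {breakeven:,.0f} requests/month")
```

With these illustrative numbers (100 ms per request at 0.5 GB), each request costs about $0.0000007, so a $35/month container breaks even at roughly 50 million requests per month. Below that volume pay-per-use is cheaper; above it, the always-on container wins.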

Scalability Requirements

Serverless computing provides automatic scalability, which is useful for applications that need to respond quickly to changes in traffic. Containers, while scalable, may require more configuration and management.

Team Expertise

If your team is already familiar with Docker and Kubernetes, it may be easier to work with containers. If your team is more familiar with specific programming languages and frameworks than infrastructure management, serverless might be a better fit.

Finally, pick the right tool for the job rather than looking for a one-size-fits-all solution. Serverless and containers are both powerful tools, but they suit different scenarios and needs. In practice, you may find that combining serverless and containers lets you take full advantage of their respective strengths.

Tencent EdgeOne Edge Functions

If you're considering serverless for your application deployment, we highly recommend Tencent EdgeOne Edge Functions. Deploying on EdgeOne's global network gives you the advantages of serverless computing, so you can concentrate on development and on improving the user experience while serverless technology streamlines your operations.